
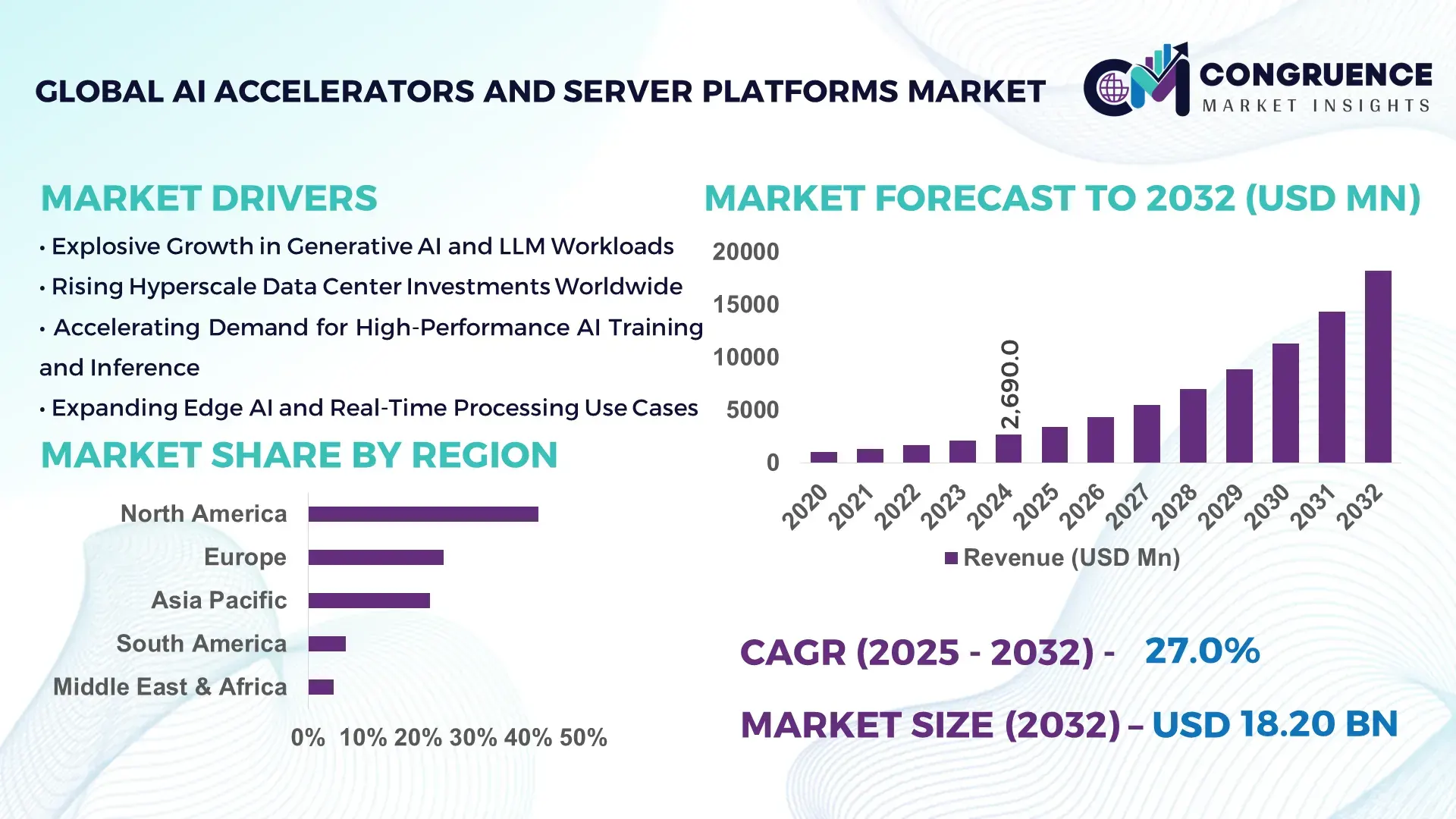

The Global AI Accelerators and Server Platforms Market was valued at USD 2,690.0 Million in 2024 and is anticipated to reach a value of USD 18,204.6 Million by 2032, expanding at a CAGR of 27% between 2025 and 2032, according to an analysis by Congruence Market Insights. This growth is driven by the rapid scaling of generative AI workloads, hyperscale data center expansion, and rising enterprise demand for high-performance, energy-efficient compute infrastructure.
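The headline figures can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1/years) − 1. The sketch below assumes the rate compounds over the eight annual steps from the 2024 base value to the 2032 value. Under that assumption it reproduces the stated 27%. The function names are illustrative and not part of any published methodology.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `start_value` at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


# Figures from the report, in USD million.
VALUE_2024 = 2690.0
VALUE_2032 = 18204.6

rate = cagr(VALUE_2024, VALUE_2032, years=2032 - 2024)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 27.0%

# Year-by-year path at a flat 27% rate (illustrative only).
for year in range(2025, 2033):
    print(year, round(project(VALUE_2024, 0.27, year - 2024), 1))
```

The exponent is eight because growth compounds from the 2024 base year, even though the forecast window is labelled 2025–2032.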

The United States is the dominant country in the AI Accelerators and Server Platforms Market, supported by large-scale production capacity, deep capital investment, and advanced deployment across industries. In 2024, the U.S. hosted over 45% of the world's hyperscale data centers and more than 5,300 operational data center facilities overall, many of them optimized for AI workloads. Annual investment in AI infrastructure exceeded USD 60 billion, concentrated in GPU clusters, AI-optimized servers, and liquid-cooling technologies. Key applications include cloud computing, defense analytics, autonomous systems, and healthcare AI. In healthcare, over 70% of large hospitals use AI-enabled server platforms for imaging and diagnostics. Technological advances include large-scale adoption of AI accelerators drawing more than 700 watts per chip, custom silicon integration, and rack-scale architectures delivering more than 10× the performance density of legacy server systems.

Market Size & Growth: Valued at USD 2,690.0 Million in 2024, projected to reach USD 18,204.6 Million by 2032, growing at a CAGR of 27%, driven by exponential AI model complexity and compute intensity

Top Growth Drivers: Enterprise AI adoption 68%, inference efficiency improvement 42%, data center AI workload penetration 55%

Short-Term Forecast: By 2028, AI-optimized servers are expected to deliver 35% lower energy cost per workload

Emerging Technologies: Liquid-cooled AI racks, custom AI ASICs, disaggregated server architectures

Regional Leaders: North America USD 7,900 Million by 2032 (cloud AI dominance); Asia Pacific USD 5,400 Million (manufacturing AI adoption); Europe USD 3,600 Million (energy-efficient AI systems)

Consumer/End-User Trends: Cloud service providers and large enterprises account for over 65% of deployments

Pilot or Case Example: In 2023, a U.S. hyperscaler reduced AI training time by 40% using next-gen accelerator clusters

Competitive Landscape: NVIDIA ~45%, followed by AMD, Intel, Google, and AWS

Regulatory & ESG Impact: AI data center energy-efficiency mandates targeting a 30% reduction in power intensity

Investment & Funding Patterns: Over USD 120 Billion invested globally in AI compute infrastructure since 2021

Innovation & Future Outlook: Rack-scale AI systems and accelerator-server co-design shaping next-generation deployments

The AI Accelerators and Server Platforms Market is shaped by cloud computing (42% of deployments), enterprise AI workloads (33%), and research and government applications (25%). Recent innovations include liquid-cooled accelerator servers, chiplet-based AI processors, and AI-optimized networking. Regulatory focus on energy efficiency, rising regional AI adoption in Asia Pacific, and growing demand for sovereign AI infrastructure are defining future growth trajectories.

The AI Accelerators and Server Platforms Market has become strategically critical as organizations transition from experimental AI to production-scale deployment. Enterprises increasingly view AI infrastructure as a core capability supporting competitiveness, operational efficiency, and digital sovereignty. Advanced accelerators paired with AI-optimized server platforms now enable real-time inference, large-scale model training, and edge-to-cloud AI orchestration across industries such as finance, healthcare, manufacturing, and defense.

Technological differentiation is accelerating. Liquid-cooled AI server platforms deliver up to 35% higher thermal efficiency than traditional air-cooled servers, enabling higher compute density per rack. Regionally, North America leads in deployment volume, while Asia-Pacific leads in adoption rate, with over 58% of large enterprises integrating AI accelerators into production environments. Short-term projections indicate that by 2027, AI-driven workload orchestration could reduce compute idle time by 30%, directly improving infrastructure utilization.
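The 30% idle-time projection can be translated into utilization terms. If a cluster runs at utilization u, its idle fraction is 1 − u, and cutting idle time by 30% leaves 0.7(1 − u) idle. The 60% starting utilization below is a hypothetical value chosen for illustration, not a figure from this report.

```python
def utilization_after_idle_cut(utilization: float, idle_reduction: float) -> float:
    """Utilization after shrinking the idle fraction (1 - utilization) by `idle_reduction`."""
    if not (0.0 <= utilization <= 1.0 and 0.0 <= idle_reduction <= 1.0):
        raise ValueError("utilization and idle_reduction must be fractions in [0, 1]")
    idle = 1.0 - utilization
    return 1.0 - idle * (1.0 - idle_reduction)


# Hypothetical baseline: accelerators busy 60% of the time.
baseline = 0.60
improved = utilization_after_idle_cut(baseline, idle_reduction=0.30)
print(f"{baseline:.0%} -> {improved:.0%}")  # 60% -> 72%
```

The absolute gain depends on the baseline. A cluster that is already 90% busy would gain only 3 percentage points from the same 30% idle-time cut.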

Compliance and ESG considerations are increasingly embedded in procurement strategies. Firms are committing to energy-efficiency improvements of 25% and water-usage reduction of 20% by 2030 through next-generation cooling and power management technologies. In 2024, Japan achieved a 28% reduction in data-center energy intensity through national deployment of AI-optimized, low-power server platforms.

Looking ahead, tighter integration between accelerators, networking, and software stacks will position the AI Accelerators and Server Platforms Market as a pillar of operational resilience, regulatory compliance, and sustainable digital growth.

The AI Accelerators and Server Platforms Market is shaped by rapid advancements in compute architectures, increasing AI workload density, and the shift toward scalable, energy-efficient data center designs. Enterprises are prioritizing high-performance accelerators and optimized server platforms to handle training and inference workloads that now require petaflop-level compute. Market dynamics are influenced by evolving enterprise IT strategies, sovereign AI initiatives, and increasing demand for low-latency processing. Supply chain localization, semiconductor capacity expansion, and growing adoption of AI-as-a-service models are also reshaping procurement and deployment decisions across regions.

Enterprise adoption of AI workloads is a primary growth driver for the AI Accelerators and Server Platforms Market. Over 65% of large enterprises now deploy AI for analytics, automation, and customer engagement, increasing demand for accelerator-enabled servers. Training workloads have grown in complexity by over 50% in the past three years, requiring specialized hardware capable of parallel processing and high memory bandwidth. Industries such as finance and healthcare report productivity improvements exceeding 30% after deploying AI-optimized server platforms, reinforcing sustained infrastructure investment.

Power availability and infrastructure readiness pose key restraints. AI accelerator servers consume up to 3× more power than conventional servers, creating grid stress in dense data center regions. Nearly 40% of planned AI data center projects faced delays in 2024 due to power and cooling limitations. Additionally, legacy facilities struggle to retrofit for liquid cooling and high-density racks, limiting rapid deployment despite strong demand.

Sovereign AI initiatives represent a major opportunity. Governments are investing heavily in domestic AI compute infrastructure to support national security, healthcare, and public services. More than 25 countries had announced national AI compute programs by 2024, many prioritizing locally hosted accelerator-based server platforms. These initiatives are driving demand for region-specific designs, secure architectures, and long-term infrastructure contracts.

System integration remains a challenge due to the complexity of combining accelerators, networking, storage, and software stacks. Over 30% of enterprises report delays in AI infrastructure deployment due to compatibility and optimization issues. Shortages of skilled AI infrastructure engineers further increase deployment time and operational risk, particularly for mid-sized organizations.

Expansion of Modular AI Data Center Infrastructure: Modular and prefabricated AI server deployments increased by 48% between 2022 and 2024, shortening installation timelines by up to 40%. Over 55% of new AI infrastructure projects report cost-efficiency gains from modular designs that support high-density accelerator racks.

Rapid Shift Toward Liquid-Cooled Accelerator Servers: Liquid cooling adoption reached 38% of new AI server installations in 2024, reducing thermal losses by 30% and enabling rack power densities above 100 kW. This trend is especially strong in North America and Northern Europe.

Integration of Custom AI Accelerators: Custom and semi-custom AI accelerators now account for 27% of deployments, improving workload-specific performance by up to 45% compared to general-purpose GPUs. Cloud providers are leading adoption to optimize inference efficiency.

Growth in Edge-AI Server Platforms: Edge-deployed AI servers grew by 41% in unit installations, supporting low-latency applications such as smart manufacturing and autonomous systems. Enterprises report latency reductions of 50% for real-time AI workloads using localized accelerator platforms.

The AI Accelerators and Server Platforms Market is segmented by type, application, and end-user, reflecting the diversity of compute architectures, deployment models, and consumption patterns shaping AI infrastructure demand. By type, the market spans GPU-based accelerators, custom AI ASICs, FPGAs, and AI-optimized server platforms designed for training- and inference-intensive workloads. Each type addresses distinct performance, power-efficiency, and scalability requirements. Application-wise, demand is concentrated in cloud computing, data analytics, machine learning training, real-time inference, and edge AI deployments, driven by rising enterprise digitalization and AI model complexity. From an end-user perspective, hyperscale cloud providers, large enterprises, research institutions, and government agencies dominate deployments, while SMEs are emerging as an important growth cohort through AI-as-a-service access models. Segmentation highlights a clear shift toward tightly integrated accelerator–server ecosystems, increased workload specialization, and broader adoption across both centralized data centers and distributed edge environments.

GPU-based AI accelerators currently represent the leading type, accounting for approximately 46% of total adoption, due to their flexibility, mature software ecosystems, and suitability for both training and inference workloads. These platforms are widely used across cloud data centers and enterprise AI environments, supporting large language models and computer vision tasks at scale. Custom AI ASICs rank second, driven by their ability to deliver 30–40% higher workload-specific efficiency than general-purpose accelerators. They are also the fastest-growing type: custom and semi-custom AI ASICs are expanding at an estimated 32% CAGR, fueled by cloud providers and large enterprises seeking lower power consumption, predictable performance, and tighter hardware–software co-design. FPGA-based accelerators and edge-focused AI servers serve niche use cases such as low-latency inference, industrial automation, and telecom workloads, and together account for roughly 24% of adoption.

Cloud computing and hyperscale data center workloads form the leading application segment, representing about 44% of total usage, as AI training and inference increasingly shift to centralized, scalable environments. These platforms support generative AI, recommendation engines, and enterprise analytics, requiring high-density accelerator clusters and advanced networking. Real-time inference and edge AI applications are the fastest-growing application area, advancing at an estimated 29% CAGR, supported by demand for low-latency processing in autonomous systems, smart manufacturing, and intelligent surveillance. While training workloads dominate compute intensity, inference workloads are growing faster in volume, particularly across distributed environments. Other applications, such as scientific research, healthcare diagnostics, and financial modeling, collectively account for nearly 26%. Consumer and enterprise adoption trends reinforce this shift. In 2024, over 40% of global enterprises reported piloting AI accelerator-enabled platforms for customer experience and decision automation. In the U.S., 42% of hospitals are testing AI systems that combine imaging data with electronic health records, relying heavily on accelerator-backed server platforms.

Hyperscale cloud service providers are the leading end-user segment, accounting for approximately 48% of deployments, driven by large-scale AI model training, multi-tenant inference services, and AI-as-a-service offerings. These organizations prioritize high-performance accelerators, rack-scale server platforms, and advanced cooling systems to support dense compute environments. The fastest-growing end-user group is SMEs and mid-sized enterprises, expanding at an estimated 26% CAGR, enabled by managed AI platforms and cloud-based access to accelerator infrastructure. This cohort increasingly adopts AI for marketing analytics, supply chain optimization, and customer engagement without owning physical hardware. Large enterprises in sectors such as finance, healthcare, and manufacturing together account for about 34%, with adoption rates exceeding 60% in financial services and 45% in manufacturing operations. Adoption statistics highlight behavioral trends: in 2024, 38% of enterprises globally reported active pilots of AI accelerator-backed platforms, while over 60% of Gen Z consumers expressed higher trust in brands using AI-driven support systems.

North America accounted for the largest market share at 41.8% in 2024; however, Asia-Pacific is expected to register the fastest growth, expanding at a CAGR of 31.2% between 2025 and 2032.

Regional performance in the AI Accelerators and Server Platforms Market is shaped by differences in data center density, AI investment intensity, semiconductor manufacturing capacity, and enterprise digital maturity. North America leads with approximately 41.8% share and dominates hyperscale AI server deployments, hosting over 55% of global AI training workloads. Europe follows with approximately 24.6% share, driven by regulated enterprise adoption and sustainability-led infrastructure upgrades. Asia-Pacific holds nearly 22.1% share, supported by large-scale cloud expansion, domestic chip programs, and rising AI usage across manufacturing and e-commerce. South America and the Middle East & Africa together account for around 11.5%, reflecting emerging-stage adoption focused on localized AI use cases, government digitization programs, and energy-sector modernization.
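As a consistency check, the 2024 regional shares reported in this section can be combined with the 2032 regional values from the "Regional Leaders" highlight to back out each region's implied growth rate. The sketch assumes each region's 2024 value is its share of the USD 2,690.0 million global total and that growth compounds over eight years, as in the global figure. The dictionary and function names are hypothetical.

```python
import math

GLOBAL_2024 = 2690.0  # USD million, report figure

# 2024 regional shares (%) as stated in the regional analysis.
SHARES_2024 = {
    "North America": 41.8,
    "Europe": 24.6,
    "Asia-Pacific": 22.1,
    "South America": 6.9,
    "Middle East & Africa": 4.6,
}

# 2032 regional values (USD million) from the "Regional Leaders" highlight.
TARGETS_2032 = {
    "North America": 7900.0,
    "Asia-Pacific": 5400.0,
    "Europe": 3600.0,
}


def implied_regional_cagr(years: int = 8) -> dict[str, float]:
    """Implied CAGR per region, from its 2024 share of the global total to its 2032 value."""
    rates = {}
    for region, target in TARGETS_2032.items():
        base = GLOBAL_2024 * SHARES_2024[region] / 100
        rates[region] = (target / base) ** (1 / years) - 1
    return rates


assert math.isclose(sum(SHARES_2024.values()), 100.0)  # shares are exhaustive

for region, rate in implied_regional_cagr().items():
    print(f"{region}: {rate:.1%}")
```

The implied rates come out at roughly 27.6% for North America, 23.6% for Europe, and 31.8% for Asia-Pacific. This ranking matches the report's statement that Asia-Pacific grows fastest. The half-point gap from the stated 31.2% presumably reflects rounding in the published shares and targets.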

North America represents approximately 41.8% of the global AI Accelerators and Server Platforms Market, supported by high data center concentration and enterprise AI maturity. The region hosts over 5,300 large-scale data centers, many optimized for accelerator-heavy workloads. Key industries driving demand include cloud computing, financial services, healthcare diagnostics, defense analytics, and autonomous systems. Government support is visible through federal AI funding programs exceeding USD 25 billion and incentives for advanced semiconductor manufacturing. Technologically, the region leads in liquid-cooled AI servers, rack-scale architectures, and custom accelerator integration. A major local player has expanded AI-focused server manufacturing capacity by 35% to support next-generation GPU and ASIC deployments. Consumer behavior shows higher enterprise AI adoption in healthcare and finance, where over 60% of large organizations use accelerator-backed platforms for real-time analytics and decision automation.

Europe accounts for roughly 24.6% of the global market, with strong demand from Germany, the UK, and France, which together contribute more than 58% of regional deployments. The market is shaped by regulatory oversight, sustainability mandates, and enterprise modernization initiatives. Regulatory bodies emphasize energy efficiency, data sovereignty, and explainable AI, pushing demand for compliant server platforms. Over 45% of new AI server installations in Europe now prioritize low-power accelerators and advanced cooling technologies. Adoption of emerging technologies such as confidential computing and AI workload isolation is rising. A regional technology provider has deployed energy-optimized AI servers across multiple EU countries, achieving 28% lower power intensity. Consumer behavior reflects regulatory pressure, with enterprises increasingly demanding transparent and auditable AI infrastructure for critical workloads.

Asia-Pacific holds approximately 22.1% of the global market, ranking third by share behind North America and Europe but reported as second globally by deployment volume, with China, Japan, and India as the top consuming countries. The region operates over 2,800 hyperscale and enterprise data centers, with rapid expansion underway. Manufacturing and cloud infrastructure investments dominate, particularly in semiconductor fabs and AI-ready server assembly. Regional innovation hubs in Shenzhen, Tokyo, Bengaluru, and Seoul are driving accelerator customization and edge AI platforms. A leading regional player has increased domestic AI server production output by 40% to reduce import dependency. Consumer behavior shows strong growth driven by e-commerce, mobile AI applications, and smart manufacturing, with over 50% of digital-native enterprises deploying AI accelerators for personalization and automation.

South America contributes approximately 6.9% of global market share, led by Brazil and Argentina. Infrastructure investments focus on telecom expansion, cloud localization, and energy-efficient data centers. Over 65% of AI infrastructure deployments are concentrated in urban economic hubs. Governments are offering tax incentives and import-duty reductions for advanced IT equipment to support digital transformation. A regional cloud service provider has launched accelerator-backed data centers serving media and language-processing workloads. Consumer behavior indicates demand tied closely to media analytics, content localization, and multilingual AI systems, especially in streaming and digital advertising platforms.

The Middle East & Africa region holds approximately 4.6% market share, with the UAE and South Africa leading adoption. Demand is driven by oil & gas analytics, smart city development, and government-led digital transformation. Over 30 national AI programs are active across the region, supporting accelerator-enabled server deployments. Technological modernization includes AI-powered surveillance, predictive maintenance, and financial digitization. A regional technology firm has partnered with global hardware vendors to deploy AI-ready data centers capable of handling 20+ megawatts of compute load. Consumer behavior varies by country, with higher adoption in public-sector services and financial platforms.

United States – 38.6% Market Share: Strong dominance due to large-scale data center capacity, advanced semiconductor ecosystem, and high enterprise AI adoption.

China – 17.9% Market Share: Leadership supported by domestic accelerator production, extensive cloud infrastructure, and large-scale industrial AI deployment.

The AI Accelerators and Server Platforms Market is moderately consolidated, characterized by a small group of dominant technology providers and a wider base of specialized and regional competitors. More than 35 active companies operate globally across accelerator chips, AI-optimized servers, interconnects, and integrated rack-scale systems. The top five companies collectively account for approximately 68–72% of total deployments, reflecting strong concentration at the high-performance end of the market, particularly in hyperscale and enterprise AI environments.

Leading players maintain competitive positioning through vertical integration, custom silicon development, and ecosystem control, while smaller firms focus on inference-optimized accelerators, edge AI servers, or energy-efficient architectures. Strategic initiatives include multi-year supply agreements with hyperscale cloud providers, co-design partnerships between chipmakers and server OEMs, and accelerated product launch cycles averaging 12–18 months. Innovation competition centers on performance-per-watt improvements exceeding 30% per generation, liquid-cooling readiness, and high-bandwidth memory scalability. The market also shows increasing competitive intensity in Asia-Pacific, where domestic vendors are expanding accelerator output capacity by 25–40% annually to reduce reliance on imports.
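Per-generation efficiency gains compound over successive launches. The sketch below assumes a constant 30% performance-per-watt improvement per generation and compares the two ends of the 12–18 month launch cadence cited above over a six-year window. Both the constant gain and the six-year horizon are simplifying assumptions for illustration.

```python
import math


def generations_in(years: float, cadence_months: float) -> int:
    """Number of complete product generations released within `years`."""
    return math.floor(years * 12 / cadence_months)


def perf_per_watt_multiple(gain_per_generation: float, generations: int) -> float:
    """Cumulative performance-per-watt multiple after `generations` launches."""
    return (1 + gain_per_generation) ** generations


for cadence in (12, 18):
    gens = generations_in(years=6, cadence_months=cadence)
    multiple = perf_per_watt_multiple(0.30, gens)
    print(f"{cadence}-month cadence: {gens} generations in 6 years -> {multiple:.2f}x perf/W")
```

Under these assumptions, a 12-month cadence compounds to about 4.83× and an 18-month cadence to about 2.86× over six years. Launch cadence therefore matters as much as the per-generation gain.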

NVIDIA Corporation

Advanced Micro Devices (AMD)

Hewlett Packard Enterprise (HPE)

Intel Corporation

Amazon Web Services (AWS)

Google LLC

Microsoft Corporation

Super Micro Computer, Inc.

Dell Technologies

Huawei Technologies

Qualcomm

Technology evolution in the AI Accelerators and Server Platforms Market is driven by the need to support increasingly complex AI models requiring extreme parallelism, memory bandwidth, and power efficiency. Modern AI accelerators now draw more than 700 watts per chip, enabling dense compute clusters capable of handling trillion-parameter models. High-bandwidth memory (HBM) integration has become standard, with leading platforms offering 192 GB or more of HBM per accelerator (up to 288 GB of HBM3e on the newest parts) and memory bandwidth above 5 TB/s.
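To see why HBM capacity matters for trillion-parameter models, the sketch below estimates how many accelerators are needed just to hold the model weights. It uses the 192 GB per-accelerator HBM and 5 TB/s bandwidth figures cited above. It assumes 2 bytes per parameter (16-bit weights such as BF16) and decimal units. It deliberately ignores activations, KV caches, and optimizer state, which in practice raise the requirement considerably.

```python
import math

GB = 1e9   # decimal gigabyte
TB = 1e12  # decimal terabyte


def accelerators_for_weights(params: float, bytes_per_param: float, hbm_bytes: float) -> int:
    """Minimum accelerators whose combined HBM can hold the raw model weights."""
    return math.ceil(params * bytes_per_param / hbm_bytes)


def weight_sweep_seconds(hbm_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Time to read a full HBM stack once at the given memory bandwidth."""
    return hbm_bytes / bandwidth_bytes_per_s


params = 1e12  # a trillion-parameter model
hbm = 192 * GB
print(accelerators_for_weights(params, bytes_per_param=2, hbm_bytes=hbm))  # 11
print(f"{weight_sweep_seconds(hbm, 5 * TB) * 1e3:.1f} ms")  # 38.4 ms
```

With 288 GB parts, the same 2 TB of weights would fit on 7 accelerators. This is one reason larger HBM stacks reduce the number of devices a model must be split across.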

Server platform design is shifting toward rack-scale and pod-based architectures, allowing thousands of accelerators to operate as a unified compute fabric. Liquid cooling adoption has surpassed 38% of new AI server installations, enabling rack densities above 100 kW while reducing thermal losses by nearly 30%. Interconnect technologies such as NVLink-class and Ethernet-based fabrics exceeding 800 Gbps are improving node-to-node communication efficiency.
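A rough power budget shows why rack densities above 100 kW go hand in hand with new cooling approaches. The sketch combines the 700 W per-accelerator figure cited above with two hypothetical assumptions: eight accelerators per server, and 2 kW of per-server overhead for CPUs, memory, networking, and fans. A lower 20 kW budget is included only for comparison. Real server configurations vary widely.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerSpec:
    accelerators: int          # accelerators per server (hypothetical)
    accelerator_watts: float   # power per accelerator
    overhead_watts: float      # CPUs, memory, NICs, fans (hypothetical)

    @property
    def watts(self) -> float:
        """Total server power draw."""
        return self.accelerators * self.accelerator_watts + self.overhead_watts


def servers_per_rack(rack_budget_watts: float, server: ServerSpec) -> int:
    """Whole servers that fit within a rack power budget."""
    return int(rack_budget_watts // server.watts)


server = ServerSpec(accelerators=8, accelerator_watts=700, overhead_watts=2000)
for budget_kw in (20, 100):
    n = servers_per_rack(budget_kw * 1000, server)
    print(f"{budget_kw} kW rack: {n} servers, {n * server.accelerators} accelerators, "
          f"{n * server.watts / 1000:.1f} kW drawn")
```

Under these assumptions, a 100 kW rack holds 13 servers (104 accelerators, 98.8 kW drawn), while a 20 kW budget fits only 2 servers. Nearly all of that power ends up as heat the cooling system must remove.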

Emerging technologies include custom AI ASICs optimized for inference workloads, chiplet-based accelerator designs improving yield by 20–25%, and AI-driven workload orchestration software that improves utilization rates beyond 85%. Edge AI server platforms are also evolving, with compact accelerators delivering 10–15× performance gains over CPU-only systems for real-time analytics. Collectively, these technologies are redefining how AI infrastructure is designed, deployed, and scaled.
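Much of the utilization gain from workload orchestration comes from packing jobs onto accelerators more tightly. The sketch below uses first-fit decreasing, a classic bin-packing heuristic, to place jobs onto 8-accelerator nodes, where each job requests some number of accelerators. It then reports the utilization of the nodes in use. This is a generic illustration, not the algorithm of any particular orchestration product. The node size and job sizes are hypothetical.

```python
def first_fit_decreasing(job_sizes: list[int], node_capacity: int) -> list[list[int]]:
    """Place jobs (accelerator counts) onto nodes using first-fit decreasing."""
    nodes: list[list[int]] = []
    free: list[int] = []  # free accelerator slots per node
    for size in sorted(job_sizes, reverse=True):
        if size > node_capacity:
            raise ValueError(f"job of size {size} exceeds node capacity {node_capacity}")
        for i, slots in enumerate(free):
            if size <= slots:  # first existing node with room
                nodes[i].append(size)
                free[i] -= size
                break
        else:  # no existing node fits: open a new one
            nodes.append([size])
            free.append(node_capacity - size)
    return nodes


def utilization(nodes: list[list[int]], node_capacity: int) -> float:
    """Fraction of accelerators busy across the nodes that were allocated."""
    used = sum(sum(node) for node in nodes)
    return used / (len(nodes) * node_capacity)


jobs = [4, 2, 6, 1, 3, 8, 2, 4, 1, 5]  # hypothetical accelerator requests
packed = first_fit_decreasing(jobs, node_capacity=8)
naive = [[job] for job in jobs]  # one job per node, no packing
print(packed)
print(f"packed: {len(packed)} nodes at {utilization(packed, 8):.0%}")
print(f"naive:  {len(naive)} nodes at {utilization(naive, 8):.0%}")
```

For these jobs, packing needs 5 nodes at 90% utilization, compared with 10 nodes at 45% when each job gets its own node. Production schedulers also weigh interconnect topology, job priority, and preemption, which this sketch ignores.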

In March 2024, NVIDIA unveiled its Blackwell platform, featuring next-generation GPUs, NVLink connectivity, and enhanced Tensor Cores that improve training and inference efficiency for AI models up to trillion-parameter scale, enabling broader adoption across cloud, enterprise, and HPC environments. Source: www.nvidia.com

In May 2025, Dell Technologies expanded the Dell AI Factory with NVIDIA, unveiling upcoming AI-optimized PowerEdge servers supporting Blackwell-based platforms such as HGX B300 NVL16 and RTX PRO Blackwell editions, offering up to 288 GB of HBM3e memory per GPU for advanced enterprise AI and HPC workloads. Source: www.dell.com

In October 2024, AMD launched the 5th-generation EPYC server CPUs and Instinct MI325X accelerators at Advancing AI 2024, with partners including Google Cloud, Microsoft Azure, and Oracle Cloud deploying these solutions to power high-performance AI tasks and support more than one million models. Source: www.amd.com

In late 2025, Qualcomm announced the forthcoming AI200 and AI250 inference accelerators and rack-scale systems designed for data centers, positioning the company as a new competitor to incumbent accelerator builders for inference workloads and prompting notable stock movements. Source: www.tomshardware.com

The AI Accelerators and Server Platforms Market Report provides comprehensive coverage of the global ecosystem supporting artificial intelligence compute infrastructure. The scope includes detailed analysis of accelerator types such as GPU-based platforms, custom AI ASICs, FPGAs, and inference-optimized processors, alongside server platform architectures spanning rack-scale systems, modular servers, and edge-deployed AI hardware. The report evaluates applications across cloud computing, machine learning training, real-time inference, scientific research, healthcare diagnostics, financial analytics, industrial automation, and smart infrastructure.

Geographic coverage spans North America, Europe, Asia-Pacific, South America, and the Middle East & Africa, with granular insights into regional deployment patterns, infrastructure readiness, and technology adoption. The scope further examines end-user segments including hyperscale cloud providers, large enterprises, SMEs, research institutions, and government agencies. Technology coverage includes interconnects, memory architectures, cooling systems, power management, and AI workload orchestration software.

Additionally, the report addresses emerging segments such as sovereign AI infrastructure, edge AI server deployments, and sustainability-focused data center designs. It provides strategic insights into competitive positioning, innovation trajectories, regulatory considerations, and future infrastructure planning, offering decision-makers a clear, data-driven view of the market’s structure, evolution, and long-term relevance.

| Report Attribute / Metric | Details |
|---|---|
| Market Revenue (2024) | USD 2,690.0 Million |
| Market Revenue (2032) | USD 18,204.6 Million |
| CAGR (2025–2032) | 27.0% |
| Base Year | 2024 |
| Forecast Period | 2025–2032 |
| Historic Period | 2020–2024 |
| Segments Covered | By Type, By Application, By End-User |
| Key Report Deliverables | Revenue Forecast, Market Trends, Growth Drivers & Restraints, Technology Insights, Segmentation Analysis, Regional Insights, Competitive Landscape, Regulatory Overview, Recent Developments |
| Regions Covered | North America, Europe, Asia-Pacific, South America, Middle East & Africa |
| Key Players Analyzed | NVIDIA Corporation, Advanced Micro Devices (AMD), Hewlett Packard Enterprise (HPE), Intel Corporation, Amazon Web Services (AWS), Google LLC, Microsoft Corporation, Super Micro Computer, Inc., Dell Technologies, Huawei Technologies, Qualcomm |
| Customization & Pricing | Available on Request (10% Customization Free) |