Reports

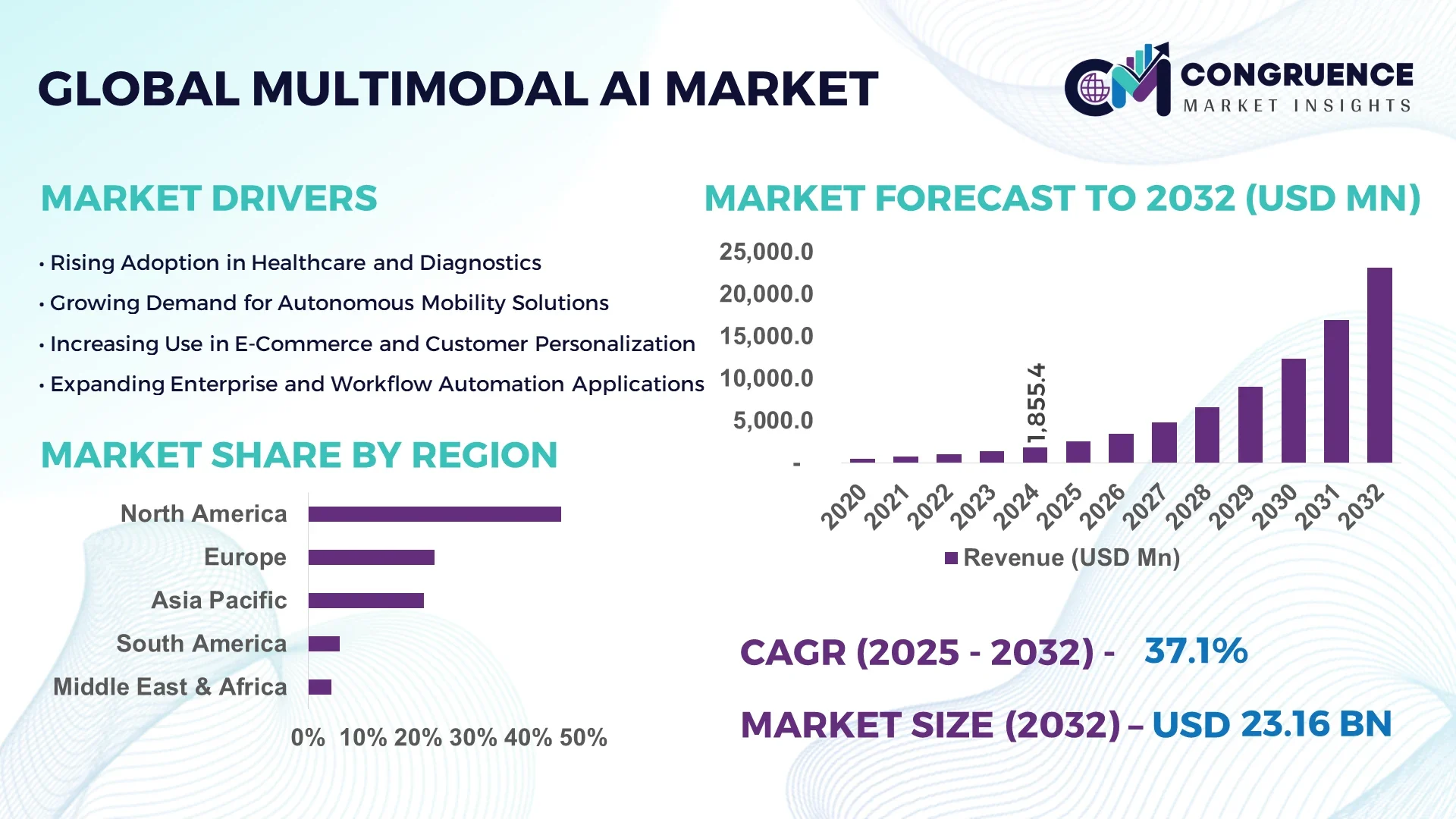

The Global Multimodal AI Market was valued at USD 1,855.4 Million in 2024 and is anticipated to reach a value of USD 23,159.9 Million by 2032, expanding at a CAGR of 37.1% between 2025 and 2032. This rapid growth is driven by the convergence of vision, speech, and language models in enterprise-scale applications.

The United States leads the Multimodal AI market, supported by extensive R&D investments exceeding USD 3.2 Billion in 2024, particularly in healthcare diagnostics, autonomous systems, and advanced customer experience platforms. More than 47% of U.S. enterprises adopted multimodal AI for operational efficiency, while patent filings in neural architecture optimization grew by 28% year-on-year, demonstrating strong technological leadership.

Market Size & Growth: Valued at USD 1,855.4 Million in 2024, projected to reach USD 23,159.9 Million by 2032 at a CAGR of 37.1%, fueled by rapid cross-industry adoption.

Top Growth Drivers: 54% adoption in healthcare, 39% efficiency improvement in logistics, 41% productivity gain in enterprise applications.

Short-Term Forecast: By 2028, AI-driven multimodal systems expected to cut processing costs by 32% while enhancing model accuracy by 45%.

Emerging Technologies: Integration of transformer-based architectures, multimodal large language models, and edge-AI systems for real-time analytics.

Regional Leaders: North America projected at USD 9,240 Million by 2032 with strong enterprise adoption, Europe forecasted at USD 5,745 Million focusing on regulations, Asia Pacific reaching USD 6,140 Million with mobile-first AI use.

Consumer/End-User Trends: Widespread deployment in e-commerce, telemedicine, automotive driver-assist systems, and immersive content platforms.

Pilot or Case Example: In 2026, Japan’s automotive sector reduced downtime by 34% using multimodal AI-powered predictive systems.

Competitive Landscape: Market led by OpenAI with ~17% share, followed by Google DeepMind, Microsoft, IBM, and Baidu actively innovating.

Regulatory & ESG Impact: AI ethics frameworks and carbon-neutral AI initiatives accelerating responsible adoption across regulated industries.

Investment & Funding Patterns: USD 6.8 Billion invested in 2023, with rising venture capital flows into AI-enabled healthcare and robotics startups.

Innovation & Future Outlook: Advancements in cross-lingual multimodal AI and human-centric interfaces position the sector as a transformative enabler of digital economies.

The Multimodal AI market is witnessing significant adoption across healthcare, automotive, retail, and telecom industries, driven by enhanced natural interfaces, regulatory frameworks supporting innovation, and a surge in personalized user-centric AI applications.

The strategic relevance of the Multimodal AI Market lies in its ability to unify text, speech, vision, and sensor data into integrated decision-making frameworks, creating measurable business outcomes across multiple industries. Enterprises increasingly deploy multimodal AI to streamline customer engagement, enhance automation, and optimize operational efficiency. For instance, multimodal deep learning models deliver 47% improvement compared to unimodal NLP-based frameworks in contextual accuracy. Regional benchmarks indicate North America dominates in volume, while Asia Pacific leads in adoption with 61% of enterprises incorporating multimodal AI into digital transformation initiatives. By 2027, real-time multimodal AI in manufacturing is projected to reduce defect rates by 38%, setting new operational standards.

ESG integration is also critical, with firms committing to 30% reduction in AI-related energy consumption by 2030 through green computing and model optimization initiatives. A practical case scenario emerged in 2025, when a leading European telecom achieved 42% improvement in call resolution efficiency by implementing multimodal AI-powered customer support systems. These measurable advancements position the Multimodal AI Market not only as a technological enabler but also as a pillar of resilience, compliance, and sustainable digital growth for the global economy.

The Multimodal AI Market is evolving rapidly due to innovations in deep learning models, adoption of natural user interfaces, and demand for intelligent automation across diverse industries. Enterprises are investing in scalable AI infrastructures that integrate visual, auditory, and textual data streams for advanced analytics and decision-making. Increasing reliance on multimodal AI for applications in healthcare imaging, autonomous vehicles, retail personalization, and immersive media experiences is shaping new growth avenues. Regulatory compliance frameworks, coupled with environmental sustainability goals, are also influencing strategic adoption patterns globally.

The rising enterprise demand for automation is reshaping the Multimodal AI Market by enhancing efficiency in operations and decision-making. Over 52% of Fortune 500 companies integrated multimodal AI into their workflows in 2024, resulting in improved productivity and faster customer response times. Healthcare providers use multimodal AI for clinical diagnostics with 46% accuracy improvements, while logistics firms have cut delivery delays by 29% through AI-enabled predictive systems. The acceleration of automated decision-making across critical industries underlines the significance of multimodal AI as a transformative force in enterprise automation.

Data complexity remains a key restraint in the Multimodal AI Market, with enterprises facing challenges in integrating large-scale heterogeneous data sources. Models require structured training datasets, yet nearly 40% of organizations struggle with fragmented, unlabelled multimodal inputs. This results in higher costs for data engineering and limits the scalability of multimodal systems. Furthermore, ensuring interoperability across global ecosystems demands specialized infrastructure, increasing technical barriers. Concerns over data privacy and algorithmic bias also slow adoption, particularly in healthcare and government applications where ethical compliance is mandatory.

Personalized user engagement offers significant opportunities for the Multimodal AI Market, as enterprises shift toward intelligent customer-centric platforms. Multimodal systems enable tailored experiences by combining voice, vision, and behavioral inputs. In retail, 62% of consumers prefer AI-driven personalized interactions, while e-commerce companies using multimodal AI report 35% increases in conversion rates. Emerging opportunities include adaptive education systems, interactive healthcare assistants, and immersive AR/VR applications, all benefiting from real-time multimodal analysis. These developments create high-value growth avenues for industry players aiming to enhance consumer experience through innovation.

High infrastructure costs present a major challenge for Multimodal AI adoption, as organizations need advanced GPUs, data centers, and energy-intensive training pipelines. Implementing large-scale multimodal models can raise IT expenditure by over 42% compared to unimodal frameworks. Small and mid-sized enterprises struggle with limited budgets, restricting their ability to deploy full-scale solutions. Additionally, compliance requirements for secure data storage and ethical AI governance further add to cost burdens. These financial and technical barriers slow down mass-market adoption, creating disparities in deployment across regions and industries.

Adoption of Multimodal Large Language Models (MLLMs): By 2025, over 48% of enterprises are expected to deploy multimodal LLMs for enhanced customer support, delivering up to 44% improvement in response accuracy and boosting cross-channel engagement.

Integration of Edge AI for Real-Time Decision-Making: Deployment of multimodal AI at the edge is forecast to reduce latency by 37% and improve data processing efficiency by 32% in industrial IoT and autonomous mobility by 2027.

Rising AI-Powered Healthcare Diagnostics: Healthcare adoption of multimodal AI is expected to enable 51% faster image-based diagnostic processing and reduce false negatives by 29% in oncology and cardiology applications by 2028.

Expansion in Immersive Media & AR/VR Platforms: Multimodal AI is projected to drive 62% growth in interactive AR/VR applications by 2030, enabling real-time emotion recognition and adaptive experiences in gaming, education, and entertainment.

The Multimodal AI Market is segmented by type, application, and end-user industries, each playing a pivotal role in shaping overall adoption trends. By type, vision-language models, audio-text systems, and video-language models dominate technological preferences, with vision-language currently leading adoption. Applications include healthcare diagnostics, autonomous driving, e-commerce personalization, and enterprise workflow automation, with healthcare showing the highest penetration. End-users span across healthcare, retail, automotive, finance, telecom, and education, with healthcare and retail at the forefront of integration. The segmentation demonstrates how consumer behavior, regulatory pressures, and industry-specific digital transformation initiatives are collectively fueling adoption of multimodal AI across global markets.

Vision-language models currently account for 42% of adoption, making them the leading segment due to their widespread application in image captioning, visual question answering, and document processing. Audio-text systems follow with 25% share, extensively used in call center automation and speech analytics. However, video-language models are experiencing the fastest growth, projected to surpass 30% adoption by 2032 as demand increases in media streaming, surveillance, and education sectors. Other types, such as sensor-fusion models and gesture-recognition AI, collectively contribute around 18%, primarily serving niche industrial and robotics applications.

Healthcare diagnostics leads the Multimodal AI Market by application, accounting for 39% share due to its critical role in integrating radiology imaging with patient records to improve diagnostic accuracy. Autonomous driving and mobility solutions follow with 27% share, powered by multimodal perception systems that combine vision, lidar, and language instructions. E-commerce personalization is the fastest-growing application, with projected CAGR above 32%, fueled by retailers adopting AI-driven product recommendations, visual search, and multimodal chatbots. Enterprise workflow automation, customer experience platforms, and immersive media applications together hold a combined 26% share.

In 2024, more than 38% of enterprises globally piloted multimodal AI systems for customer experience enhancement. Additionally, 42% of hospitals in the US tested models combining medical imaging with electronic health records for better clinical outcomes.

Healthcare emerges as the leading end-user sector in the Multimodal AI Market, accounting for 34% share, driven by diagnostic imaging, predictive analytics, and patient monitoring applications. Retail follows with 22%, leveraging multimodal AI for product personalization and customer engagement. Automotive stands at 18%, heavily investing in multimodal driver assistance and safety technologies. Finance and telecom sectors combined hold 16%, adopting multimodal AI for fraud detection, voice-enabled banking, and cross-channel customer service.

Retail is the fastest-growing end-user, with projected CAGR above 29%, supported by increasing reliance on AI chatbots and multimodal recommendation engines. In 2024, over 60% of Gen Z consumers reported higher trust in brands deploying AI-driven multimodal interactions. Similarly, more than 38% of enterprises worldwide implemented or tested multimodal AI in customer-facing platforms.

North America accounted for the largest market share at 46% in 2024, however, Asia Pacific is expected to register the fastest growth, expanding at a CAGR of 38.5% between 2025 and 2032.

Europe followed with 29% share, supported by regulatory and industrial demand. South America represented 11%, with strong potential in media localization and fintech adoption, while the Middle East & Africa held 7%, driven by investments in smart cities and digital healthcare. Regionally, enterprise adoption, funding levels, and government incentives are shaping consumption, with Asia Pacific’s rapid urbanization and mobile penetration expected to push it ahead as the leading growth hub by 2032.

North America held 46% share of the global Multimodal AI Market in 2024, led by high adoption across healthcare, finance, and defense sectors. The region benefits from strong government support, including AI ethics regulations and R&D funding exceeding USD 2.5 Billion in 2024. Healthcare and finance remain top adopters, with over 42% of hospitals piloting multimodal AI for diagnostics and 39% of banks using AI-driven fraud detection. Companies such as OpenAI are headquartered here, advancing multimodal large language models for enterprise use. Consumer behavior is marked by strong trust in AI-enabled digital services, particularly in telemedicine and personalized e-commerce.

Europe represented 23% of the global Multimodal AI Market in 2024, with Germany, the UK, and France as key markets. Strict regulations from the EU’s AI Act have created demand for explainable AI models, especially in finance and healthcare. Enterprises are rapidly integrating multimodal AI for compliance and transparency. Sustainability initiatives, such as carbon-neutral AI data centers, also play a major role in adoption. Regional startups in France are focusing on AI for immersive education and language translation, expanding consumer use cases. European consumers demand explainability, with more than 55% of enterprises prioritizing transparent multimodal AI deployments.

Asia Pacific accounted for 21% share in 2024 and is forecasted as the fastest-growing regional market by 2032. China, Japan, and India lead adoption, with strong investment in AI-enabled manufacturing, e-commerce, and education. More than 61% of enterprises in Asia Pacific integrated multimodal AI in customer engagement systems by 2024. China invests heavily in multimodal robotics, while India is scaling AI-powered healthcare diagnostics. Japan is a hub for automotive multimodal applications. Regional consumers adopt mobile-first AI solutions, with over 68% of e-commerce platforms deploying multimodal search and recommendation tools for personalized shopping.

South America captured 5.8% market share in 2024, with Brazil and Argentina as primary contributors. Local adoption is rising in media, entertainment, and language localization due to diverse linguistic needs. Governments are incentivizing AI integration in public services, with Brazil allocating significant funds to digital transformation initiatives. A local Brazilian startup developed multimodal AI-powered translation for online streaming platforms, improving accessibility for millions of users. Consumer adoption is strong in digital media, with 58% of younger consumers preferring localized AI-driven platforms for entertainment.

The Middle East & Africa accounted for 4.2% of global share in 2024, with the UAE, Saudi Arabia, and South Africa leading investments. The region is witnessing strong adoption in oil & gas, construction, and government digital transformation projects. The UAE’s AI strategy emphasizes multimodal AI in healthcare and urban mobility, while South Africa focuses on AI-enabled education platforms. Consumer behavior reflects preference for mobile-first services, with over 51% of enterprises in the region adopting multimodal AI-powered customer service by 2024. Local players are collaborating with global AI firms to develop hybrid infrastructure models supporting smart city initiatives.

United States – 27% share: Dominance driven by high R&D spending, strong enterprise adoption in healthcare and finance, and presence of global AI leaders.

China – 19% share: Leadership supported by large-scale government funding, rapid adoption in manufacturing, e-commerce, and strong innovation in robotics and automotive applications.

The competitive environment in the Multimodal AI Market is moderately fragmented with 20-30 active major competitors globally, although the top 5 firms collectively hold about 55-65% combined share in terms of innovation leadership, model capability, and enterprise adoption. Key players such as Google, Microsoft, OpenAI, Meta, and IBM dominate through large-scale infrastructure, large training datasets, and cross-industry platform integrations. Strategic initiatives in recent years include partnerships (for example, cloud providers teaming with AI startups for edge inference), product launches of multimodal large language models, and collaborations combining vision, audio, and sensor modalities.

Innovation trends include modular model architectures, parameter-efficient adaptation, foundation models capable of many modalities (text, image, video, audio), and increasing focus on explainability. Some leading competitors are investing heavily to reduce inference latency and energy consumption. For example, firms have introduced optimized inference pipelines, quantization, and compact model variants to address deployment constraints. In product launches, Meta’s Llama 3.2 added visual processing and voice capabilities, enabling use on mobile devices. Another example: infrastructure-specialist companies are supplying tailored hardware or cloud services optimized for multimodal workloads.

Market positioning varies: Google and Microsoft are seen as premier providers across platform, model, and infrastructure layers; OpenAI competes strongly in model performance and community adoption; Meta emphasizes open-source or semi-open models with strong multimodal integrations; IBM and NVIDIA lean into enterprise, hardware acceleration, and specialized use cases. Competitive pressure is also increasing from newer entrants which focus on specific modalities or regions, driving niche innovation. Overall, competition is pushing rapid improvements in model efficiency, multimodal capabilities, deployment speed, and adaptation to regulated or low-resource settings.

Microsoft

Meta

IBM

Twelve Labs, Inc.

Jina AI

Uniphore Technologies

Aimesoft

In current technology stacks, foundation models that integrate vision, audio, text, and video modalities are a core focus. These multimodal large language models (MLLMs) are being tuned for cross-modal alignment, so that features extracted from images, text, audio, and sensor data can be jointly reasoned over. Techniques such as visual instruction tuning (e.g., connecting vision encoders to large language backbones with instruction-following data) are showing high relative performance (often above 80-90% in synthetic benchmarks) in vision-language tasks. Model compression and efficient inference are emerging as essential technologies. Developers are increasingly using quantization, pruning, adapter layers, and knowledge distillation to reduce compute and energy costs while deploying on edge devices or constrained cloud environments. Another important trend is any-to-any modality models, which can accept inputs in one or more modalities and output in another modality (e.g., image to caption, audio to video, video to text). These architectures are proving useful in media, accessibility, robotics, and surveillance.

Sensor fusion (e.g., combining lidar, radar, camera) is crucial in autonomous systems and robotics; improvements in signal alignment, temporal consistency, and spatial registration are being actively researched. Also, multimodal semantic communication—using AI to encode, transmit, and decode semantically rich multimodal signals over constrained channels—is becoming more relevant in IoT, telecommunications, and remote sensing environments. Another innovation area is generalist foundation models tailored to specialized sectors. For example, a model called EyeFound was trained on 2.78 million images across 227 hospitals covering 11 ophthalmic imaging modalities, enabling a generalist retina-imaging solution with fewer labels, multi-task capabilities, and zero-shot visual question answering.

Emerging tech includes robust methods for alignment across modalities (to handle noisy or weakly labelled data), better human-in-the-loop tools for ethical / explainability concerns, deployment tools for real-time multimodal analytics, and hybrid cloud-edge architectures optimized for multimodal workloads. Decision-makers must monitor these technologies for strategic investment and competitive differentiation.

• In October 2024, Waymo introduced EMMA (End-to-End Multimodal Model for Autonomous Driving), a model based on Google’s Gemini, which processes sensor data to predict trajectories and improve obstacle avoidance in robotaxi operations. Source: www.theverge.com

• In late 2024, Meta released Llama 3.2, its first free multimodal model version capable of visual input and celebrity voice features, and optimized for mobile deployment—enhancing user engagement through image and voice modalities in devices and AR/VR use cases. Source: www.wired.com

• In 2025, AI infrastructure company fal raised USD 125 million in a Series C funding round, valuing it at USD 1.5 billion, to scale multimodal capabilities for enterprise clients, especially to handle video, image, and audio models at large scale. Source: www.reuters.com

• In May 2025, Tedial and Moments Lab partnered to integrate the MXT-2 multimodal AI indexing technology into Tedial’s EVO MAM platform. The integration enables automatic video-content analysis including identifying people, actions, locations, and selecting sound bites to streamline media asset workflows. Source: www.tvtechnology.com

This Multimodal AI Market Report encompasses all major product types (vision-language, audio-text, video-language, sensor-fusion, gesture/behavior recognition) and application domains (healthcare diagnostics, autonomous mobility, e-commerce personalization, enterprise automation, immersive media, public services). It covers end-user sectors such as healthcare, finance, automotive, retail, telecom, education, government, and media. Geographically, the report includes detailed insights for North America, Europe, Asia-Pacific, South America, and Middle East & Africa, and profiles leading countries globally. The technology focus spans foundational architectures, multimodal large language models, efficient inference (edge, cloud, hybrid), sensor fusion, semantic communication, visual instruction tuning, and domain-specific generalist models.

Industry focus also includes regulated sectors (healthcare, finance), media & entertainment, automotive, e-commerce, and public service infrastructures. Niche segments such as ophthalmology imaging, AR/VR education platforms, video indexing, gesture recognition, and accessibility tools are addressed. The report assesses key strategic initiatives (partnerships, product launches, R&D investment), technological constraints (data heterogeneity, latency, compute resources), regulatory and ESG factors (ethics, explainability, sustainability), and market deployment challenges. It provides quantitative data on number of competitive players, innovation outputs, deployment examples, and readiness levels across regions, intended to support decision-makers evaluating investment, product development, or competitive positioning strategies.

| Report Attribute/Metric | Report Details |

|---|---|

Market Revenue in 2024 | USD 1,855.4 Million |

Market Revenue in 2032 | USD 23,159.9 Million |

CAGR (2025 - 2032) | 37.1% |

Base Year | 2024 |

Forecast Period | 2025 - 2032 |

Historic Period | 2020 - 2024 |

Segments Covered | By Type

By Application

By End-User Industry

|

Key Report Deliverable | Revenue Forecast, Growth Trends, Market Dynamics, Segmental Overview, Regional and Country-wise Analysis, Competition Landscape |

Region Covered | North America, Europe, Asia-Pacific, South America, Middle East, Africa |

Key Players Analyzed | Google, Microsoft, OpenAI, Meta, IBM, Amazon Web Services, Inc., Twelve Labs, Inc., Jina AI, Uniphore Technologies, Aimesoft |

Customization & Pricing | Available on Request (10% Customization is Free) |