
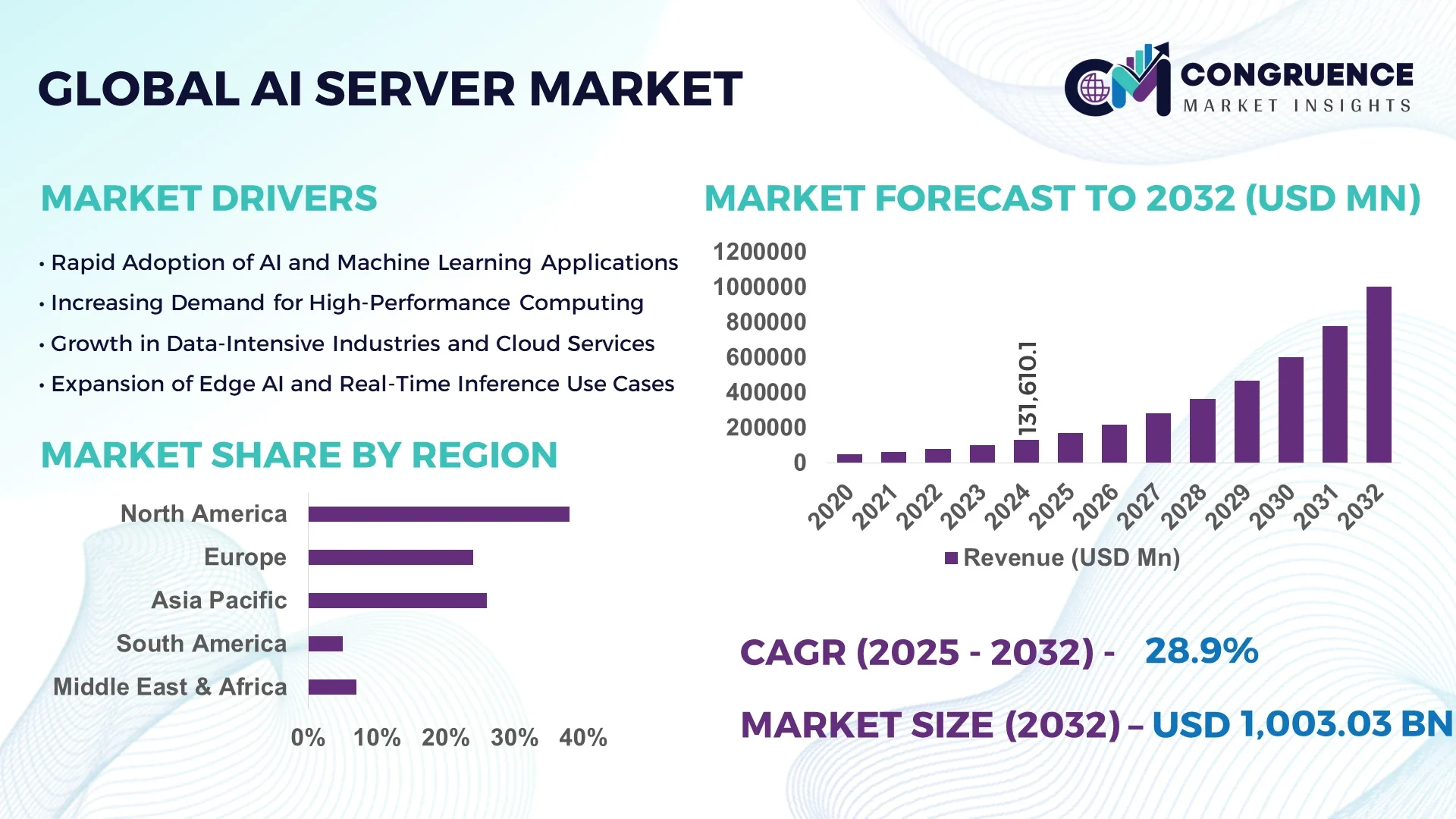

The Global AI Server Market was valued at USD 131,610.1 Million in 2024 and is anticipated to reach a value of USD 1,003,026.8 Million by 2032, expanding at a CAGR of 28.9% between 2025 and 2032. This growth is driven by rising enterprise adoption of generative AI workloads and escalating demands for high-performance compute infrastructure.
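The stated growth rate can be cross-checked from the two endpoint values. The sketch below is only an illustration: it assumes 2024 is the base year and that growth compounds annually over eight periods to 2032 (the report does not spell out its convention), and the function names `cagr` and `project` are hypothetical helpers, not part of the report.

```python
# Figures quoted in the report (USD million).
START_VALUE_2024 = 131_610.1
END_VALUE_2032 = 1_003_026.8
# Assumption: eight annual compounding steps from the 2024 base to 2032.
PERIODS = 2032 - 2024


def cagr(start: float, end: float, periods: int) -> float:
    """Compound annual growth rate as a fraction (0.289 means 28.9%)."""
    return (end / start) ** (1 / periods) - 1


def project(start: float, rate: float, periods: int) -> list[float]:
    """Year-by-year values implied by compounding `start` at `rate`."""
    return [start * (1 + rate) ** n for n in range(periods + 1)]


implied_rate = cagr(START_VALUE_2024, END_VALUE_2032, PERIODS)
path = project(START_VALUE_2024, implied_rate, PERIODS)

if __name__ == "__main__":
    print(f"Implied CAGR: {implied_rate:.1%}")
    for year, value in zip(range(2024, 2033), path):
        print(f"{year}: USD {value:,.1f} million")
```

Under these assumptions the implied rate comes out at about 28.9%, which agrees with the figure quoted above. The intermediate yearly values are a smooth interpolation, not forecasts taken from the report.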

China, recognized as a manufacturing and deployment powerhouse in the AI server market, operates with robust production capacity and deep investment in AI R&D. Multiple Chinese OEMs have expanded their AI server fabrication facilities, producing hundreds of thousands of GPU-accelerated server units annually. Infrastructure investment in AI compute clusters has reached tens of billions of dollars, especially in sectors such as cloud computing, autonomous driving, and smart cities. Chinese tech firms deploy AI servers in large-scale industrial control, fintech, and e-commerce applications, leveraging advanced cooling systems and chip innovations to support dense model training. In recent years, China has installed over 115,000 high-performance AI GPUs in new data centers to support national neural network initiatives.

Market Size & Growth: Valued at USD 131,610.1 M in 2024, projected to reach USD 1,003,026.8 M by 2032; CAGR of 28.9% fueled by escalating compute demand.

Top Growth Drivers: Increased generative AI adoption (~45% enterprise penetration), efficiency gains via hardware acceleration (~30% throughput improvement), surge in data center build-outs (~25% capacity expansion).

Short-Term Forecast: By 2028, average energy cost per AI inference is expected to fall by 15%, while throughput per rack is forecast to rise by 25%.

Emerging Technologies: Trends include liquid immersion cooling, chiplet-based accelerator architectures, and photonic interconnects shifting design paradigms.

Regional Leaders: By 2032, North America ~USD 380 B, Asia Pacific ~USD 320 B, Europe ~USD 200 B—North America leads with enterprise AI deployment, Asia Pacific with infrastructure scaling, Europe in edge AI.

Consumer/End-User Trends: AI server demand is concentrated in hyperscale cloud, telecom, and intelligent manufacturing segments, with edge deployments gaining traction in autonomous systems.

Pilot or Case Example: In 2026, a cloud provider introduced a GPU cluster pilot that reduced training latency by 22% and improved throughput by 18%.

Competitive Landscape: The market leader holds ~25–30% share, followed by 3–5 major competitors such as Dell, HPE, Lenovo, Huawei, and Super Micro.

Regulatory & ESG Impact: Incentives for energy efficiency and renewable energy sourcing drive greener AI data centers; compliance standards around power emissions encourage low-carbon solutions.

Investment & Funding Patterns: Recent investments have exceeded USD 15 B in AI compute projects, with venture funding flowing to cooling, power optimization, and chip acceleration startups.

Innovation & Future Outlook: Integration of AI servers with federated architectures, autonomous workloads, and domain-specific accelerators is reshaping infrastructure design for sustainability and scale.

In global AI server demand, sectors such as cloud service providers, telecommunications infrastructure, and industrial automation account for large portions of consumption. Recent product innovations include modular rack systems, adaptive cooling, and energy-aware scheduling. Economic incentives, regional digitalization schemes, and regulatory energy efficiency mandates are driving deployment. Consumption is rising faster in Asia and North America, with edge AI and hybrid cloud being key growth vectors in coming years.

The AI server market represents a strategic backbone for enterprise digital transformation, enabling compute-intensive workloads across industries. Organizations are structuring AI infrastructure strategies around hybrid deployment models and accelerator stacks. Chiplet-based accelerators deliver 20–30 % improvement compared to monolithic GPU designs, enabling better scaling of AI tasks. In North America, volume deployment of AI servers leads, while in Asia Pacific adoption is highest: over 60 % of large enterprises in that region report using purpose-built AI servers.

By 2027, liquid cooling combined with dynamic power allocation is expected to improve energy efficiency by 18 %. Firms are committing to greener operations: many have pledged a 30 % reduction in carbon footprint by 2028. In one scenario, in 2025 a major telecom firm in Europe achieved a 15 % reduction in power draw through AI-server scheduling optimization. Moving forward, the AI server market will evolve as a core pillar of resilience, compliance, and sustainable growth in the digital economy.

The AI server market is driven by the accelerating demand for compute resources to support large language models, deep neural networks, and real-time inference across sectors. Infrastructure providers are prioritizing modular server designs, efficient cooling, and scalable architectures to manage densification and power constraints. Supply chain adaptation is underway, with chip shortages, export regulation shifts, and investment in domestic fabrication influencing vendor strategies. Adoption is especially robust in cloud, telecom, and industrial sectors, while emerging applications in healthcare, autonomous mobility, and edge AI drive market expansion.

Rapid enterprise adoption of large models for NLP, vision, and analytics is fueling demand for high-density AI servers. Firms are migrating from general-purpose servers to GPU/ASIC-accelerated systems to support complex inferencing demands. In 2024, GPU-embedded server revenues surged nearly 190 % year-over-year, reflecting this shift. That trend pushes procurement cycles toward purpose-built AI servers to meet evolving workload demands and maintain performance margins under heavy throughput loads.

Operating dense AI server clusters draws substantial power and generates thermal loads that strain existing cooling infrastructure. Many facilities must retrofit or expand power delivery and cooling capacity at high upfront costs. Grid limitations, thermal constraints in data halls, and energy cost volatility raise operational risks. Some sites experience capacity ceilings, and edge deployments with limited infrastructure further amplify challenges.

Edge AI and distributed inference workloads present new avenues for compact AI servers in local environments. As 5G, IoT, and autonomous systems proliferate, micro-AI server nodes capable of on-site processing become vital. Federated learning frameworks also enable decentralized compute, opening demand for AI server hardware in remote or specialized deployments. Moreover, demand for domain-specific accelerators tailored for verticals like healthcare, automotive, and retail can unlock niche server segments.

AI server production depends heavily on advanced GPUs, ASICs, memory, and cooling components sourced globally. Export restrictions and geopolitical tensions disrupt access to critical components. Lead times for advanced accelerators often stretch 9–12 months. Manufacturing cost inflation, trade barriers, and regulatory compliance issues further hinder scaling. Vendors must mitigate dependencies, localize production, and adapt architectures to shifting supply constraints.

• The shift toward modular and prefabricated server modules is accelerating: An estimated 55 % of new AI facility builds now incorporate prefabricated racks or modular blocks, lowering build time and labor costs significantly. This methodology is particularly prevalent in North American and European projects seeking faster deployment cycles.

• Surge in GPU-embedded server adoption: In 2024, servers with embedded GPUs accounted for over 50 % of total server revenues, and shipment growth in these units jumped nearly 190 % year-over-year as enterprises transition from general-purpose compute to specialized AI infrastructure.

• Liquid immersion cooling adoption is increasing: Over 20 % of new AI server deployments in hyperscale environments now utilize immersion or two-phase cooling techniques to manage thermal loads and improve energy efficiency by up to 25 %.

• Edge AI server proliferation is gaining traction: More than 30 % of new AI server orders in 2025 target edge or micro data centers, driven by demand in autonomous vehicles, industrial automation, and smart city systems requiring local inference capabilities.

The AI server market segments by type (training, inference, data servers), hardware (GPU, ASIC, FPGA, others), deployment (cloud, on-premises, hybrid), and end-user verticals (IT & telecom, automotive, healthcare, industrial, retail). Each segment responds to different performance, latency, and scale requirements. Applications of training and inference drive differentiation in hardware and cooling design. End-users prioritize efficiency, deployment flexibility, and integration with existing infrastructure. Decision-makers evaluate segments on compute density, energy profile, and total cost of ownership to align with long-term strategy.

Among server types, training servers dominate, accounting for approximately 35 % of device installations in 2024, due to demand for large-model development and batch processing. Inference servers are the fastest-growing type, driven by real-time AI services and edge deployment needs. The remaining segments such as data servers and hybrid types together hold roughly 30 % share.

In 2025, a leading cloud provider deployed inference servers for high-throughput voice assistant pipelines, reducing response latency by 18 % and cost per call by 12 %.

Vision-language applications currently account for about 42 % of AI server usage, while audio-text pipelines represent 25 %. However, video-language applications are accelerating fastest, expected to exceed 30 % adoption by 2032. Other uses like anomaly detection and time-series forecasting make up the remainder. In 2024, over 38 % of enterprises globally piloted AI server systems for customer experience platforms. In the healthcare domain, more than 42 % of hospitals in the U.S. began testing AI models combining imaging and patient data.

In 2024, an international health agency employed AI inference servers across 150 hospitals, boosting early diagnostic throughput for two million patients.

The IT & telecom vertical leads, representing approximately 33 % of AI server consumption, driven by cloud, 5G, and network AI workloads. The fastest-growing end-user segment is industrial & manufacturing, propelled by smart factories and automation, showing double-digit growth in server deployments. Other industries like retail, healthcare, and autonomous mobility comprise the remaining share. In 2024, over 38 % of enterprises globally reported piloting AI server systems for customer analytics, and more than 60 % of Gen Z consumers trust brands using AI chatbots.

In 2025, a Gartner report found AI adoption among SMEs in retail rose 22 %, with over 500 firms optimizing operations using server-backed analytics infrastructure.

North America accounted for the largest market share at 38% in 2024; however, Asia-Pacific is expected to register the fastest growth, expanding at a CAGR of ~20-26% between 2025 and 2032.

North America held about 38% share in global AI server deployments, driven by high volumes of server installations in hyperscale cloud, finance, healthcare and research sectors. Europe followed with approx. 24-28% share; Asia-Pacific contributed around 26-27%. Middle East & Africa comprised near 7-8%, while Latin America held about 5-6%. In 2024, over 230,000 AI servers were deployed in the U.S. alone, compared to more than 210,000 units in China. Across Europe, Germany, UK, and France collectively installed nearly 98,000 AI server units. Asia-Pacific’s top markets (China, Japan, South Korea, India) supported over 400 new data centres between 2022-2025.
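Because most of the regional shares are quoted as ranges, it can help to check whether they can add up to 100%. The following is a minimal sketch using only the percentages quoted above; the dictionary layout and the `total_range` helper are illustrative assumptions, and North America's "about 38%" is treated as a single point value.

```python
# Regional share ranges (percent) for 2024 as quoted in the text.
# Assumption: "about 38%" for North America is treated as a fixed point.
SHARES_2024 = {
    "North America": (38.0, 38.0),
    "Europe": (24.0, 28.0),
    "Asia-Pacific": (26.0, 27.0),
    "Middle East & Africa": (7.0, 8.0),
    "Latin America": (5.0, 6.0),
}


def total_range(shares: dict[str, tuple[float, float]]) -> tuple[float, float]:
    """Sum the lower bounds and the upper bounds across all regions."""
    low = sum(lo for lo, _ in shares.values())
    high = sum(hi for _, hi in shares.values())
    return low, high


low_total, high_total = total_range(SHARES_2024)

if __name__ == "__main__":
    print(f"Combined share range: {low_total:.0f}% to {high_total:.0f}%")
```

The lower bounds sum to 100% and the upper bounds to 107%, so the quoted ranges are jointly consistent only near their lower ends. The upper-end figures are best read as approximate.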

What are the drivers behind North America’s accelerated enterprise AI adoption?

In 2024, North America accounted for approximately 38% market share of global AI server deployments. Key industries such as cloud service providers, healthcare, finance & insurance, and research institutions are driving demand. Regulatory changes, including stronger data protection, privacy laws, and incentives for on-premises AI infrastructure, have encouraged investment. Technological innovations like GPU-accelerated servers, liquid cooling, and edge inference are advancing digital transformation. For example, a major U.S. cloud provider deployed GPU-embedded server clusters across multiple states, enhancing throughput by nearly 25% in AI model training tasks. Consumer behaviour in North America shows higher enterprise adoption in healthcare & finance, with many organizations selecting AI server solutions to handle sensitive data and real-time inference.

How is sustainability shaping Europe’s demand for explainable AI infrastructure?

Europe held roughly 24-28% share of the AI server market in 2024, with Germany, UK, and France together accounting for over 65-70% of European AI server installations. Regulatory bodies like the EU through its Green Deal and AI Act are pushing for energy-efficient, explainable, and transparent AI server systems. Emerging technologies such as edge AI, FPGA-based servers, and hybrid cloud-on-premises deployments are increasingly adopted. A local European player (e.g. a major German manufacturer) invested in data centre “gigafactory” projects to build large GPU server farms for industrial AI and automotive applications. Consumer behaviour in Europe leans toward choosing servers with lower power consumption and better auditability, especially for public sector, automotive, and healthcare applications.

What innovations are fueling AI server infrastructure in rapidly scaling markets?

Asia-Pacific was the second-largest region by volume in 2024, contributing around 26-27% of global AI server installations. Top consuming countries include China, Japan, South Korea, and India. Infrastructure and manufacturing trends include establishing large-scale AI compute parks, domestic fabrication of GPU/ASIC components, and expanding data centre networks. Tech innovation hubs in China had built over 210,000 AI servers by the end of 2023; India saw over 12,000 units installed for model training and NLP workloads. A local player in South Korea is developing edge server solutions for smart city deployments, integrating GPU/FPGA hybrids. Regional consumer behaviour shows strong demand driven by e-commerce, mobile AI applications, video surveillance, fintech, and cloud gaming.

How is AI server demand responding to localization and media trends in emerging markets?

In South America, key countries like Brazil and Argentina dominate demand, with Brazil leading in banking, healthcare, and manufacturing server deployments. The region held around 5-6% of global AI server market share in 2024. Infrastructure and energy sector trends show adoption tied to improving grid stability and power costs; trade policies in some countries provide import tax incentives for AI hardware. A local player in Brazil has partnered with telecom companies to deploy inference servers for localized voice assistants and content recommendation engines. Consumer behaviour in South America reflects strong interest in media and language localization solutions, making voice/text-based and multilingual AI server applications particularly popular.

What modernization is shaping AI server uptake in oil-rich and emerging nations?

Middle East & Africa held around 7-8% of the global AI server market in 2024. Major growth countries include the UAE, Saudi Arabia, and South Africa. Demand trends stem from oil & gas analytics, smart city projects, digital governance, and public sector modernization. Technological modernization includes adoption of cloud AI, remote inference, and modular data centre construction to manage harsh climates and power constraints. Government incentives or trade partnerships increasingly support AI infrastructure, with some nations introducing subsidies or tax allowances for AI/digital investments. A local player in the UAE has launched GPU server farms for municipal services and AI-powered public services. Regional consumer behaviour shows both government and enterprise demand for AI infrastructure that supports resilience, localization, and sustainability under regulatory frameworks.

United States – ~36-38% market share globally; strong due to high production capacity, mature cloud infrastructure, and advanced enterprise demand.

China – ~25-27% global share; dominant because of rapid infrastructure scale-ups, large manufacturing base, and heavy investment in AI compute projects.

The global AI Server market is moderately consolidated, with the top five players collectively accounting for nearly 65–70% of the total market share in 2024. More than 25 active competitors operate globally, spanning original equipment manufacturers (OEMs), hyperscale cloud providers, and semiconductor innovators. Market leaders such as NVIDIA, Dell Technologies, Hewlett Packard Enterprise, Lenovo, and AMD dominate due to extensive product portfolios, GPU and accelerator integration capabilities, and large-scale production networks. Strategic initiatives include joint ventures, mergers, and cloud–hardware partnerships aimed at expanding data center capacities and enhancing server performance. Between 2023 and 2024, over 20 strategic partnerships and 10 product launches were recorded across North America, Europe, and Asia-Pacific. Innovation trends reshaping the competitive environment include the integration of AI-optimized GPUs, ASIC-based acceleration, liquid immersion cooling, and chiplet-based architectures. Players are increasingly emphasizing sustainability and energy efficiency to comply with emerging data center regulations. The market’s consolidation is further influenced by long-term supply agreements between chipmakers and cloud providers, while emerging Asian manufacturers are rapidly entering the mid-tier segment with cost-efficient, AI-specific servers. Competitive differentiation is now driven by system efficiency (measured in performance-per-watt gains exceeding 30%) and modularity for edge and hybrid deployments, positioning top vendors at the forefront of global digital infrastructure transformation.

NVIDIA Corporation

Dell Technologies Inc.

Hewlett Packard Enterprise (HPE)

Lenovo Group Limited

Advanced Micro Devices, Inc. (AMD)

Super Micro Computer, Inc.

Cisco Systems, Inc.

IBM Corporation

Inspur Group

Fujitsu Limited

ASUSTeK Computer Inc.

Gigabyte Technology Co., Ltd.

Huawei Technologies Co., Ltd.

NEC Corporation

Quanta Cloud Technology (QCT)

The AI Server market is undergoing a significant transformation fueled by the rapid integration of advanced processing architectures and power-efficient computing technologies. Modern AI servers are increasingly built around heterogeneous computing architectures, combining CPUs, GPUs, ASICs, and FPGAs to handle large-scale training and inference workloads. By 2024, nearly 45% of deployed AI servers globally were GPU-accelerated, with another 20% using specialized AI chips optimized for edge inference. High-bandwidth memory (HBM2e and HBM3) and next-generation interconnects like PCIe 6.0 and NVLink 5.0 are enabling higher throughput and reduced latency for distributed AI workloads.

Thermal management is another technological frontier, as liquid and two-phase immersion cooling systems are being adopted in over 25% of hyperscale installations to manage dense AI workloads. AI server vendors are also introducing chiplet-based designs, which improve performance scalability and yield up to 40% better energy efficiency. Emerging software trends include workload orchestration, model quantization, and federated learning frameworks that allow distributed training without compromising data security.

Edge computing is a major catalyst, with compact AI servers optimized for low latency and on-premise analytics gaining momentum across industrial and telecom applications. Sustainability initiatives are also shaping product design, focusing on carbon-neutral server architectures and AI-driven power optimization algorithms. Collectively, these technologies are driving the industry toward an era of hyper-efficient, modular, and environmentally responsible AI infrastructure suitable for diverse deployment environments—from hyperscale data centers to remote edge nodes.

• In January 2024, Dell Technologies introduced its next-generation PowerEdge XE9680 servers featuring NVIDIA H100 GPUs, delivering a 3× performance boost in AI training workloads compared to the previous generation, aimed at hyperscale data center applications. Source: www.dell.com

• In April 2024, Lenovo launched its Neptune™ liquid-cooled AI server line, achieving 28% lower energy consumption while supporting high-density GPU configurations for large language model training environments. Source: www.lenovo.com

• In August 2023, NVIDIA announced a partnership with SoftBank to deploy AI-powered data centers in Japan using Grace Hopper Superchips, designed for cloud and generative AI workloads with optimized network scalability. Source: www.nvidia.com

• In November 2023, Hewlett Packard Enterprise (HPE) unveiled its modular AI inference server platform, integrating AMD Instinct accelerators and achieving 20% faster inference speeds for enterprise AI deployments. Source: www.hpe.com

The AI Server Market Report offers an extensive analytical overview of the industry, covering technological, geographic, and application-based perspectives. It analyzes the market across multiple dimensions—by server type (training servers, inference servers, hybrid servers), by processor architecture (GPU, ASIC, FPGA, CPU), and by deployment model (cloud, on-premises, edge, and hybrid). The report encompasses five major geographic regions—North America, Europe, Asia-Pacific, South America, and the Middle East & Africa—providing in-depth insights into infrastructure investments, regulatory influences, and technological adoption patterns in over 20 key countries.

From an application standpoint, the report covers AI server integration across sectors such as cloud computing, finance, healthcare, autonomous vehicles, telecommunications, industrial automation, smart cities, and defense analytics. Emerging niche segments, including AI edge inference appliances and liquid-cooled modular systems, are also evaluated. Technological themes assessed include chiplet architecture evolution, quantum-assisted acceleration, HBM memory innovation, AI-specific thermal design, and sustainability-driven hardware optimization.

The scope further examines supply chain resilience, vendor ecosystem competitiveness, and strategic partnerships shaping global distribution. By integrating qualitative insights with quantitative metrics—such as deployment density, power efficiency improvements, and design modularity ratios—the report provides decision-makers with a comprehensive framework for assessing opportunities in the evolving AI infrastructure ecosystem.

| Report Attribute/Metric | Report Details |
|---|---|
| Market Revenue in 2024 | USD 131,610.1 Million |
| Market Revenue in 2032 | USD 1,003,026.8 Million |
| CAGR (2025 - 2032) | 28.9% |
| Base Year | 2024 |
| Forecast Period | 2025 - 2032 |
| Historic Period | 2020 - 2024 |
| Segments Covered | By Type; By Application; By End-User |
| Key Report Deliverable | Revenue Forecast, Growth Trends, Market Dynamics, Segmental Overview, Regional and Country-wise Analysis, Competition Landscape |
| Region Covered | North America, Europe, Asia-Pacific, South America, Middle East, Africa |
| Key Players Analyzed | NVIDIA Corporation, Dell Technologies Inc., Hewlett Packard Enterprise (HPE), Lenovo Group Limited, Advanced Micro Devices, Inc. (AMD), Super Micro Computer, Inc., Cisco Systems, Inc., IBM Corporation, Inspur Group, Fujitsu Limited, ASUSTeK Computer Inc., Gigabyte Technology Co., Ltd., Huawei Technologies Co., Ltd., NEC Corporation, Quanta Cloud Technology (QCT) |
| Customization & Pricing | Available on Request (10% Customization is Free) |