Reports

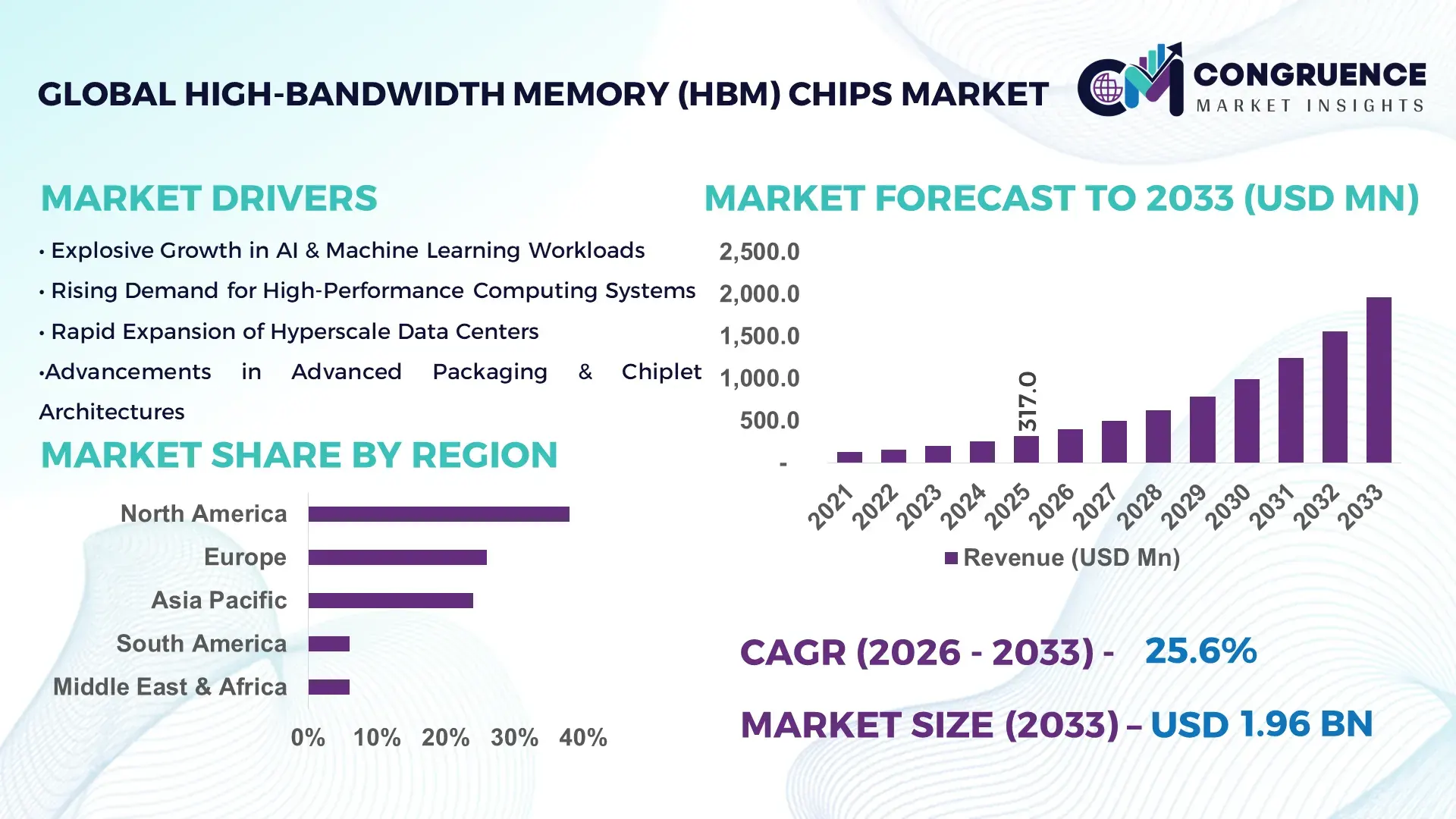

The Global High-Bandwidth Memory (HBM) Chips Market was valued at USD 317.0 Million in 2025 and is anticipated to reach a value of USD 1,960.8 Million by 2033 expanding at a CAGR of 25.58% between 2026 and 2033, according to an analysis by Congruence Market Insights. The market growth is driven by the increasing demand for high-performance computing and AI-enabled systems across multiple industries.
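The headline figures can be checked with the standard compound-growth formula, CAGR = (end / start)^(1 / years) - 1. The sketch below assumes eight compounding periods (2025 base year to 2033). The function names are illustrative and not part of any published model.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.2558 means 25.58%)."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding start_value at `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


# Figures from the report, in USD million.
START_2025 = 317.0
END_2033 = 1960.8
YEARS = 2033 - 2025  # eight compounding periods (2026 through 2033)

rate = cagr(START_2025, END_2033, YEARS)
print(f"Implied CAGR 2026-2033: {rate:.2%}")
print(f"Projected 2033 value: USD {project(START_2025, rate, YEARS):,.1f} Million")
```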

The United States dominates the High-Bandwidth Memory (HBM) Chips Market, supported by its advanced semiconductor manufacturing ecosystem. The country hosts production facilities with a combined capacity of over 500,000 wafers per month, along with investments exceeding USD 5 billion in next-generation HBM technologies. Key applications include AI servers, data centers, and HPC clusters, with adoption rates exceeding 60% in enterprise-grade computing. The U.S. continues to lead innovations such as HBM3 integration and bandwidth-efficient memory stacks, which raise computational throughput and energy efficiency across high-demand sectors.

Market Size & Growth: USD 317.0 Million in 2025, projected to USD 1,960.8 Million by 2033, driven by rising HPC adoption.

Top Growth Drivers: AI & machine learning adoption 58%, cloud data center efficiency improvement 47%, HPC integration 52%.

Short-Term Forecast: By 2028, memory latency is expected to fall by 35% and throughput to improve by 28%.

Emerging Technologies: HBM3 memory stacks, 3D TSV packaging, high-bandwidth interconnects.

Regional Leaders: North America USD 760 Million, Europe USD 510 Million, Asia-Pacific USD 450 Million; North America leads enterprise adoption.

Consumer/End-User Trends: Growing deployment in AI/ML servers, HPC clusters, and cloud computing platforms with preference for low-power memory solutions.

Pilot or Case Example: In 2026, a U.S. data center reduced memory latency by 32% using next-generation HBM3 modules.

Competitive Landscape: Market leader with ~38% share, followed by Samsung, SK Hynix, Micron, and Intel.

Regulatory & ESG Impact: Adoption supported by energy-efficiency mandates and eco-design regulations.

Investment & Funding Patterns: USD 5.2 Billion in recent investments, increasing project financing and venture funding for HBM technologies.

Innovation & Future Outlook: Focus on multi-die integration, AI optimization, and low-latency memory architectures shaping future developments.

The High-Bandwidth Memory (HBM) Chips Market is increasingly driven by HPC, AI/ML, and cloud computing applications, with emerging technologies such as HBM3 and 3D memory stacking influencing adoption. Key sectors include data centers, AI servers, and advanced computing platforms. Regional consumption shows strong growth in North America, Europe, and Asia-Pacific, while environmental and energy efficiency standards are shaping technology development and product innovation, fostering sustainable expansion and integration across industries.

The High-Bandwidth Memory (HBM) Chips Market is strategically crucial for enabling high-performance computing, AI, and cloud infrastructure. HBM3 delivers 60% higher bandwidth than HBM2, significantly improving computational efficiency. North America dominates in volume, while Asia-Pacific leads in enterprise adoption, with over 65% of AI servers using HBM-enabled memory. By 2028, AI-assisted memory management is expected to improve data center energy efficiency by 40%. Firms are committing to sustainability measures such as a 25% reduction in memory-module energy consumption by 2030. In 2026, a U.S.-based HPC cluster achieved a 32% latency reduction through HBM3 deployment, demonstrating measurable performance gains. The market is poised to remain a pillar of technological resilience, compliance, and sustainable growth, supporting advanced computing requirements and next-generation AI workloads globally.

The High-Bandwidth Memory (HBM) Chips Market is influenced by increasing demand for high-speed, low-power memory solutions in AI, HPC, and cloud computing. Innovations in 3D packaging, multi-die integration, and energy-efficient memory architectures are accelerating adoption. Growing enterprise investment in AI servers and data centers is expanding capacity requirements, while advancements in GPU and CPU compatibility drive market evolution. Regional differences, such as faster adoption in North America and emerging integration in Asia-Pacific, also shape market dynamics, creating opportunities for technology leaders and memory manufacturers to optimize production and application-specific offerings.

The rising adoption of AI, machine learning, and high-performance computing solutions has significantly increased demand for HBM chips. Over 58% of new AI servers now incorporate HBM to achieve higher bandwidth and reduce latency. HPC clusters for scientific research and cloud data centers have accelerated memory upgrade cycles, with organizations prioritizing low-power, high-throughput solutions. Investments in memory optimization for real-time analytics, simulation, and modeling applications have further reinforced HBM deployment, creating a sustained growth trajectory across computing-intensive industries.

HBM production relies on advanced semiconductor fabrication, including 3D die stacking and through-silicon via (TSV) technologies, which require significant capital investment. Although monthly wafer output runs into the hundreds of thousands, yield challenges persist: a single defective die or failed bonding step can render an entire stack unusable, which raises unit costs. Integration with GPUs and CPUs demands precise alignment and testing, adding manufacturing complexity. In addition, supply chain disruptions affecting high-purity silicon wafers and specialized interconnect materials limit scalability, restraining wider adoption despite strong demand from the AI and HPC sectors.
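Why stacking amplifies yield loss can be illustrated with a simple independence model: if every layer must be good, stack yield is the per-layer yield raised to the number of layers, and the cost of each good stack rises as its inverse. The die and bonding yields below are hypothetical placeholders, not figures from this report, and the model deliberately ignores the known-good-die testing and repair that production lines use to limit these losses.

```python
def stack_yield(die_yield: float, bond_yield: float, layers: int) -> float:
    """Fraction of stacks in which every layer is good.

    Assumes each die defect and each bonding step fail independently and
    ignores known-good-die screening and repair (simplifying assumptions).
    """
    return (die_yield * bond_yield) ** layers


def relative_cost_per_good_stack(yield_fraction: float) -> float:
    """Cost of one good stack relative to a hypothetical 100%-yield line."""
    return 1.0 / yield_fraction


# Hypothetical inputs for illustration only.
DIE_YIELD = 0.95
BOND_YIELD = 0.99

for layers in (4, 8, 12):
    y = stack_yield(DIE_YIELD, BOND_YIELD, layers)
    cost = relative_cost_per_good_stack(y)
    print(f"{layers:>2}-high stack: yield {y:.1%}, relative cost {cost:.2f}x")
```

Under these assumptions, taller stacks lose yield geometrically, which is one reason 12-layer and higher configurations are harder to produce economically.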

Emerging HBM3 and 3D-stacked memory solutions offer opportunities for ultra-high bandwidth and low-latency computing applications. Cloud data centers and AI-driven platforms are increasingly adopting HBM-enabled servers, with over 45% of enterprise projects planning upgrades within the next two years. The integration of HBM with custom GPUs and FPGAs presents performance optimization potential, while energy-efficient architectures open avenues in green computing initiatives. Expansion in automotive AI and autonomous systems also creates a growing demand segment for advanced HBM solutions.

High integration requirements between memory, CPU, and GPU components present significant engineering challenges. TSV alignment, thermal management, and die stacking precision demand specialized fabrication processes. Delays in supply chain components, such as high-purity wafers and interconnects, affect production schedules. Additionally, software optimization to fully leverage HBM performance is complex, requiring advanced firmware and AI-enabled memory management. These technical hurdles constrain deployment speed and increase operational costs for memory manufacturers.

Expansion of HBM3 Adoption: Adoption of HBM3 memory stacks has increased by 48% across enterprise AI servers in 2026, enhancing throughput and reducing memory latency.

Growth in Energy-Efficient Memory Solutions: Energy-efficient HBM architectures now power 55% of data centers in North America, reducing operational energy consumption by 27%.

Integration with AI and HPC Platforms: Over 62% of new HPC clusters globally are deploying HBM-integrated GPUs, improving computational speed by 33% and optimizing performance for simulation and real-time analytics.

Regional Technological Investments: Asia-Pacific increased investments in HBM production facilities by USD 1.2 billion in 2026, driving regional adoption in cloud computing and AI-driven enterprises while Europe focuses on precision integration with HPC applications.

The High-Bandwidth Memory (HBM) Chips Market is structured across multiple segments, including product types, applications, and end-user categories. By type, the market encompasses HBM2, HBM2E, and HBM3 modules, each optimized for high-performance computing, AI workloads, and graphics-intensive applications. Application-wise, HBM chips are deployed in HPC clusters, AI/ML servers, data centers, and advanced graphics platforms, reflecting diverse adoption patterns and specialized performance requirements. End-user segmentation highlights enterprise IT, cloud service providers, research institutions, and government agencies as primary consumers. Insights indicate that adoption intensity, technological alignment, and operational requirements drive decision-making, while regional production capabilities and infrastructure readiness shape implementation strategies. The segmentation framework provides industry stakeholders with a clear understanding of priority areas, technology-specific deployment, and end-user adoption trends, allowing for targeted investment and strategic planning.

HBM2 remains the leading product type, accounting for 46% of the market, owing to its proven integration with GPUs and HPC servers for high-bandwidth applications. HBM3 is the fastest-growing type, driven by its higher memory bandwidth and energy efficiency in AI and HPC workloads, and is particularly prominent in data-intensive applications such as AI training and scientific simulations. HBM2E serves niche segments requiring moderate bandwidth improvements; together, HBM3 and HBM2E hold a combined 29% share of the market. Other specialized types, including HBM2 stacked modules with customized interconnects, account for the remaining 25%.

High-Performance Computing (HPC) remains the dominant application, comprising 43% of the market, due to critical requirements for low-latency and high-bandwidth memory in scientific computing and simulation tasks. AI/ML servers represent the fastest-growing application segment, driven by increasing deployment in autonomous systems, NLP, and AI model training. HPC adoption is closely followed by graphics-intensive platforms and data centers, collectively contributing 31% to the application segment. Consumer adoption trends show that in 2025, over 40% of global AI research labs incorporated HBM-enabled servers into experimental workflows, while 62% of cloud service providers integrated high-bandwidth memory for real-time analytics and model deployment.

Enterprise IT remains the leading end-user segment, accounting for 39% of adoption, leveraging HBM chips in cloud infrastructure, AI research, and high-throughput computing environments. The fastest-growing end-user segment is research institutions, benefiting from HBM’s low-latency performance in simulation and experimental computing, currently achieving 28% adoption in major universities and laboratories. Other end-users include government agencies, large-scale industrial organizations, and cloud service providers, collectively making up 33% of the market. Consumer adoption statistics reveal that in 2025, 38% of global enterprises piloted HBM-integrated AI solutions for enhanced computational performance, and over 55% of Gen Z-driven tech startups prioritized HBM memory for low-latency AI applications.

North America accounted for the largest market share at 38% in 2025; however, Asia-Pacific is expected to register the fastest growth, expanding at a CAGR of 25.6% between 2026 and 2033.

North America’s dominance is driven by advanced semiconductor fabrication infrastructure, a production capacity of over 500,000 wafers per month, and high enterprise adoption across AI, HPC, and data center applications. The region reported over 62% of cloud providers integrating HBM-enabled servers in 2025, while Asia-Pacific’s rapid digital transformation and investment in AI-focused memory stacks are boosting adoption. Europe holds a 26% market share, with strong regulatory emphasis on energy efficiency, and South America and Middle East & Africa collectively account for 12%, showing niche adoption in industrial and energy applications. Overall, global demand reflects diverse adoption patterns, technological sophistication, and strategic investments across leading regions.

North America commands a 38% share of the HBM chips market, led by sectors such as healthcare, finance, and cloud computing. Regulatory initiatives promoting energy-efficient data centers and tax incentives for semiconductor R&D are encouraging advanced memory deployments. Digital transformation trends such as AI-driven analytics and HPC server upgrades are accelerating demand for HBM2 and HBM3 modules. Local players, including Micron and Intel, are actively developing next-generation HBM3 solutions to enhance bandwidth and reduce latency. Enterprises show higher adoption in healthcare diagnostics and financial modeling, while large-scale cloud providers are integrating HBM-enabled servers to improve throughput and computational efficiency across multiple applications.

Europe accounts for a 26% share of the HBM chips market, with Germany, UK, and France as leading adopters. Regulatory bodies enforce energy-efficiency mandates and sustainability initiatives, encouraging low-power, high-bandwidth memory solutions. Adoption of HBM2E and HBM3 in HPC clusters and AI servers is rising, complemented by digital transformation in research and industrial automation. Local companies such as ASML are investing in semiconductor process innovations to support memory integration. Enterprises in Europe prioritize explainable AI and low-latency memory, while high-performance simulation and industrial computing are key drivers, reflecting regional emphasis on regulatory compliance and operational efficiency.

Asia-Pacific ranks third globally in HBM chip consumption, accounting for 24% of the market. Leading countries include China, Japan, and India, with significant investments in semiconductor fabs and memory stack production. Infrastructure trends highlight the expansion of AI and HPC research hubs, with a focus on low-latency, high-bandwidth solutions. Regional tech centers in Shenzhen and Tokyo are advancing HBM3 integration with GPUs and AI accelerators. Local companies such as South Korea's SK Hynix are increasing HBM3 output to serve AI training centers and cloud computing providers. Regional consumer behavior shows high adoption in mobile AI applications, e-commerce, and data-intensive analytics platforms, driving demand for memory with high efficiency and bandwidth.

South America holds a 6% share of the HBM chips market, with Brazil and Argentina as leading countries. Growth is influenced by industrial automation, media production, and energy sector modernization. Government incentives for tech infrastructure upgrades and trade partnerships support memory adoption. Local firms are exploring HBM integration for AI-powered industrial analytics. Consumer behavior emphasizes media, gaming, and language localization, creating demand for low-latency, high-bandwidth memory solutions that optimize computational workloads and enhance digital experiences.

The Middle East & Africa accounts for a 6% market share, with UAE and South Africa leading adoption. Key demand drivers include oil & gas, construction, and data center infrastructure expansion. Technological modernization trends focus on AI-enabled HPC systems and energy-efficient memory stacks. Government initiatives encourage investment in digital transformation and local manufacturing partnerships. Local players are exploring deployment of HBM-enabled servers for industrial automation. Regional consumer behavior favors enterprise-grade high-performance computing for industrial monitoring and real-time analytics, supporting adoption in specialized sectors requiring low-latency memory solutions.
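As a consistency check, the regional shares stated above can be applied to the 2025 global market value of USD 317.0 Million. This minimal sketch assumes the shares refer to 2025 revenue; the helper name `regional_values` is hypothetical.

```python
MARKET_2025_USD_M = 317.0  # global market value in 2025 (from the report)

# 2025 regional shares in percent, as stated in the regional analysis.
REGION_SHARE_PCT = {
    "North America": 38,
    "Europe": 26,
    "Asia-Pacific": 24,
    "South America": 6,
    "Middle East & Africa": 6,
}


def regional_values(total: float, shares_pct: dict) -> dict:
    """Split a total market value by percentage shares that must sum to 100."""
    if abs(sum(shares_pct.values()) - 100) > 1e-9:
        raise ValueError("regional shares do not sum to 100%")
    return {region: total * pct / 100 for region, pct in shares_pct.items()}


values = regional_values(MARKET_2025_USD_M, REGION_SHARE_PCT)
# Print regions from largest to smallest implied 2025 value.
for region, usd_m in sorted(values.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{region:<22} USD {usd_m:6.1f} Million")
```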

United States – 38% Market Share: Dominance driven by advanced semiconductor production capacity and strong enterprise demand in AI, HPC, and cloud computing.

China – 21% Market Share: Supported by high investment in memory fabrication, government-backed AI initiatives, and growing adoption in HPC and cloud infrastructure.

The High‑Bandwidth Memory (HBM) Chips market is highly concentrated, dominated by a few major global players, with pockets of innovation from emerging entities. More than 20 active competitors are involved in HBM development, integration, and production, but the top five companies collectively account for an estimated 90‑95% of overall market output, in terms of both supply volume and technology influence, as of 2025.

SK Hynix holds a leading position with roughly 60‑70% supply dominance, particularly through HBM3 and HBM4 stacks, supported by long‑term partnerships with GPU and AI accelerator vendors. Micron Technology has expanded its footprint significantly, raising its share toward ~20‑25% through advanced HBM3E and next‑generation products, while Samsung Electronics remains a key competitor with a double‑digit share and new HBM4 capacity coming online. Other participants, which play a smaller or indirect role in HBM supply, include Intel, AMD, Nvidia (as an OEM partner shaping memory requirements), Qualcomm, and Texas Instruments; they contribute to ecosystem diversity by influencing design standards and integration trends.

The market is oligopolistic but evolving quickly, with strategic initiatives such as new product launches (the HBM4 rollout), wafer fab expansions, and packaging innovation shaping differentiation. Collaborations between memory vendors and foundries (e.g., advanced TSV and 2.5D/3D packaging) are increasingly central to competitive positioning. Pricing strategies, such as reported HBM price increases of 20‑50% for next‑generation products, also illustrate tight supply and demand and revenue optimization efforts across firms.
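One common way to quantify market concentration is the Herfindahl-Hirschman Index (HHI), the sum of squared percentage shares, which ranges up to 10,000 for a monopoly. The shares used here are illustrative assumptions picked from the ranges quoted above (SK Hynix ~61%, Micron ~22%, and Samsung ~17% as the assumed remainder), not estimates from this report. The 1,800 threshold is the level above which the 2023 U.S. Merger Guidelines treat a market as highly concentrated.

```python
def hhi(shares_pct: list) -> float:
    """Herfindahl-Hirschman Index: sum of squared market shares in percent."""
    return sum(s ** 2 for s in shares_pct)


# Illustrative shares only (assumptions, not report estimates).
illustrative = {"SK Hynix": 61.0, "Micron": 22.0, "Samsung": 17.0}

HIGHLY_CONCENTRATED = 1800  # threshold in the 2023 U.S. Merger Guidelines

index = hhi(list(illustrative.values()))
print(f"HHI = {index:.0f}; highly concentrated: {index > HIGHLY_CONCENTRATED}")
```

Because shares are squared, the index is dominated by the largest supplier, which is why a single vendor holding about 60% of supply is enough to place the market far above the threshold.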

Intel Corporation

Advanced Micro Devices (AMD)

Nvidia Corporation

Qualcomm Incorporated

Texas Instruments

Renesas Electronics

Western Digital

Marvell Technology

Infineon Technologies

Nanya Technology

Broadcom Inc.

Technology continues to be the cornerstone of competitive differentiation in the High‑Bandwidth Memory (HBM) Chips Market, with current and emerging innovations shaping product performance, efficiency, and integration strategies. Leading developments focus on advances in memory stack density, bandwidth throughput, power efficiency, and heterogeneous integration with AI accelerators and high‑performance computing (HPC) platforms.

The transition to HBM4 technology is a major milestone, enabling per-stack bandwidth above 2 TB/s and better energy performance than previous generations. Vendors are advancing 12‑layer and multi‑die stack configurations, which increase memory capacity while maintaining low power profiles, crucial for AI inference and training workloads. Continued miniaturization and refinement in through‑silicon vias (TSVs), interposer technologies, and packaging processes facilitate higher interconnect density, reduced latency, and improved thermal characteristics essential for data‑intensive applications.
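Per-stack bandwidth figures follow from a simple product: interface width times per-pin data rate, divided by eight bits per byte. The pin rates below are representative peak values assumed for illustration (for example, 6.4 Gb/s for HBM3, and a 2,048-bit interface at 8 Gb/s for HBM4); shipping products vary and should be checked against JEDEC specifications and vendor datasheets.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HbmGeneration:
    name: str
    interface_bits: int   # data bus width per stack, in bits
    pin_rate_gbps: float  # data rate per pin, in Gb/s

    def stack_bandwidth_gbs(self) -> float:
        """Peak per-stack bandwidth in GB/s: bus width x pin rate / 8."""
        return self.interface_bits * self.pin_rate_gbps / 8


# Representative peak figures (assumptions; verify against JEDEC specs).
GENERATIONS = [
    HbmGeneration("HBM2", 1024, 2.0),
    HbmGeneration("HBM2E", 1024, 3.2),
    HbmGeneration("HBM3", 1024, 6.4),
    HbmGeneration("HBM4", 2048, 8.0),
]

for gen in GENERATIONS:
    print(f"{gen.name:<6} {gen.stack_bandwidth_gbs():7.1f} GB/s per stack")
```

Aggregate accelerator bandwidth scales with the number of stacks on the package, so a device carrying several HBM stacks multiplies the per-stack figure accordingly.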

Advanced packaging ecosystems, such as Chip‑on‑Wafer‑on‑Substrate (CoWoS) and heterogeneous integration with logic dies, are also expanding HBM functionalities and compatibility across diverse chipset architectures. These technologies enhance performance for GPUs, FPGAs, and ASICs in AI, data analytics, and edge computing scenarios. The adoption of new standards, including industry‑aligned specifications for next‑generation HBM, fosters interoperability and drives ecosystem maturity.

Furthermore, emerging technologies such as Universal Chiplet Interconnect Express (UCIe) and memory‑centric computing architectures signal potential shifts toward on‑package memory models that offer significant improvements in bandwidth density and energy efficiency. These innovations aim to maintain HBM relevance in the face of evolving workloads such as large language models (LLMs) and real‑time inferencing, ensuring that HBM remains a strategic technology cornerstone for high‑performance applications in the foreseeable future.

• In January 2026, Samsung Electronics commenced production of HBM4 chips slated for supply to Nvidia’s next‑generation AI platforms, marking a significant capacity and technology expansion in high‑performance memory stacks. Source: www.reuters.com

• In Q4 2025, SK Hynix reported record quarterly operating profit of 19.2 trillion won, driven by surging AI memory demand and strong HBM sales, with its market share estimated around 61% in advanced HBM supplies. Source: www.reuters.com

• In 2025, Micron Technology announced an approximately $24 billion investment toward advanced memory fabrication facilities in Singapore, reinforcing its strategic push into HBM production and supply capacity expansion. Source: www.barrons.com

• In September 2025, SK Hynix completed internal certification for its next‑generation HBM4 chips and prepared for customer production rollout, reinforcing its competitive leadership in high‑performance memory. Source: www.reuters.com

The scope of the High‑Bandwidth Memory (HBM) Chips Market Report encompasses technology segmentation, application domains, geographic regions, and supply‑chain dynamics, providing a comprehensive framework for decision‑makers. It includes analysis of memory product types—from legacy HBM2 and HBM2E to advanced HBM3, HBM3E and emerging HBM4 stacks—highlighting performance metrics such as bandwidth throughput, stack density, energy efficiency, and integration compatibility with AI accelerators, GPUs, and HPC solutions. The report delves into application segments including AI/machine learning platforms, data centers, high‑performance computing clusters, graphics processing ecosystems, and emerging edge‑AI use cases, detailing how memory architecture choices influence system performance and workload optimization.

Geographically, the report covers regional markets across North America, Europe, Asia‑Pacific, South America, and Middle East & Africa, examining local infrastructure capabilities, regulatory landscapes, investment trends, and adoption patterns. It addresses how regional consumer behavior variations and industrial priorities—such as energy‑efficient computing in Europe, AI acceleration hubs in North America, and digital transformation initiatives in Asia‑Pacific—shape demand for high‑bandwidth memory technologies. Additionally, the scope includes competitive intelligence on key global players, strategic partnerships, innovation trajectories, and ecosystem alliances, offering insights into capacity expansions, technology roadmaps, and potential disruptions.

Emerging segments such as automotive HBM integration, tiered memory architectures, and advanced packaging standards are also evaluated, ensuring that stakeholders understand the breadth of market opportunities and technology levers influencing growth, resilience, and future strategic direction within the HBM landscape.

| Report Attribute / Metric | Details |
|---|---|
| Market Revenue (2025) | USD 317.0 Million |
| Market Revenue (2033) | USD 1,960.8 Million |
| CAGR (2026–2033) | 25.58% |
| Base Year | 2025 |
| Forecast Period | 2026–2033 |
| Historic Period | 2021–2025 |
| Segments Covered | By Type; By Application; By End-User Insights |
| Key Report Deliverables | Revenue Forecast, Market Trends, Growth Drivers & Restraints, Technology Insights, Segmentation Analysis, Regional Insights, Competitive Landscape, Regulatory & ESG Overview, Recent Developments |
| Regions Covered | North America; Europe; Asia-Pacific; South America; Middle East & Africa |
| Key Players Analyzed | Samsung Electronics; SK Hynix; Micron Technology; Intel; AMD; Nvidia; Qualcomm; Texas Instruments; Renesas Electronics; Western Digital; Marvell Technology; Infineon Technologies; Nanya Technology; Broadcom Inc. |
| Customization & Pricing | Available on Request (10% Customization Free) |